Data Pipeline Models

Sequence Model

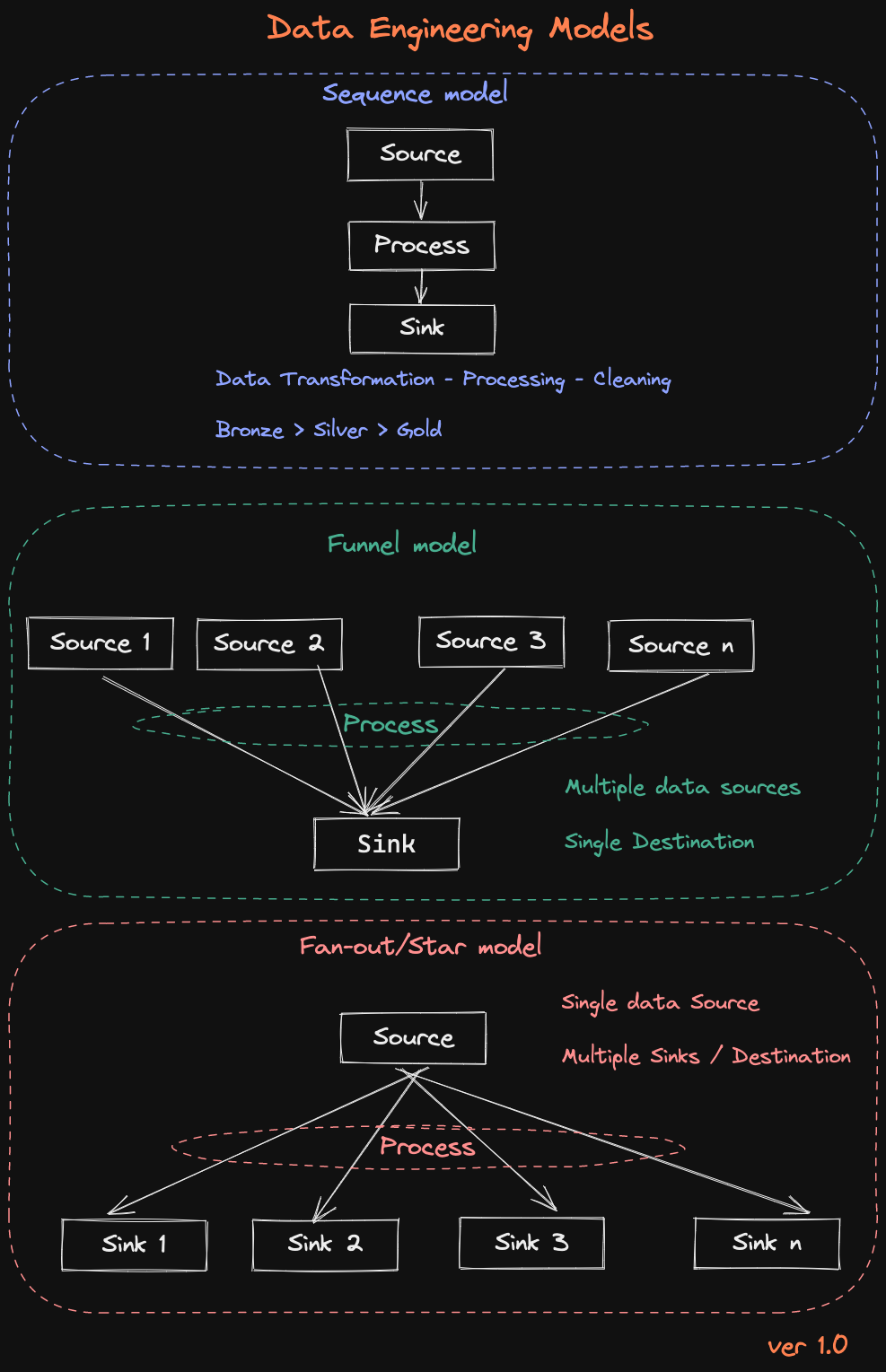

The Sequence Model represents a linear flow where data moves from a Source to a Process stage and finally to a Sink. This model is ideal for straightforward data transformations and processing tasks, where data is ingested, cleaned, and transformed sequentially before being stored in the final destination. This approach maps naturally onto the Bronze → Silver → Gold layering (often called the medallion architecture) that is common in data engineering: each layer is one step further along the sequence.
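As a rough illustration of the Source → Process → Sink flow, here is a minimal Python sketch. The function names (`source`, `process`, `sink`), the sample records, and the in-memory `warehouse` list are all assumptions made for this example; a real pipeline would read from and write to actual storage.

```python
from typing import Iterable, Iterator


def source() -> Iterator[dict]:
    """Hypothetical Source: yields raw, uncleaned records."""
    yield {"id": 1, "name": " Alice "}
    yield {"id": 2, "name": None}
    yield {"id": 3, "name": "bob"}


def process(records: Iterable[dict]) -> Iterator[dict]:
    """Process stage: drop incomplete rows, then normalize the name."""
    for record in records:
        if record["name"] is None:
            continue  # cleaning step: skip records with no name
        yield {"id": record["id"], "name": record["name"].strip().title()}


def sink(records: Iterable[dict], store: list) -> None:
    """Hypothetical Sink: appends processed records to an in-memory store."""
    store.extend(records)


# Wire the three stages together in a straight line.
warehouse: list = []
sink(process(source()), warehouse)
```

Because each stage only consumes the output of the previous one, the stages can be tested or replaced independently.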

Funnel Model

The Funnel Model takes data from multiple Sources, passes it through a central Process stage, and loads the result into a single Sink. This model is useful when combining data from various origins, such as multiple databases or external APIs, into a unified repository. The Process stage consolidates and harmonizes the differently shaped inputs before loading, so that data from diverse sources ends up in one consistent output.
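The funnel pattern can be sketched in a few lines of Python. Everything named here is hypothetical: the two sources (`orders_db`, `orders_api`), their field names, and the target schema are assumptions chosen only to show two differently shaped inputs being harmonized into one output.

```python
from itertools import chain
from typing import Iterator


def orders_db() -> Iterator[dict]:
    """Hypothetical Source 1: a database that stores amounts in cents."""
    yield {"order_id": 1, "amount_cents": 1250}


def orders_api() -> Iterator[dict]:
    """Hypothetical Source 2: an external API that returns strings."""
    yield {"id": "2", "total": "7.50"}


def normalize_db(record: dict) -> dict:
    """Map a database row onto the shared schema."""
    return {"order_id": record["order_id"], "amount": record["amount_cents"] / 100}


def normalize_api(record: dict) -> dict:
    """Map an API record onto the shared schema."""
    return {"order_id": int(record["id"]), "amount": float(record["total"])}


def process() -> Iterator[dict]:
    """Central Process stage: harmonize each source, then merge the streams."""
    return chain(
        map(normalize_db, orders_db()),
        map(normalize_api, orders_api()),
    )


# Single Sink: one unified list holding records from both sources.
unified = list(process())
```

The key design point is that each source gets its own normalizer, while the Sink only ever sees the shared schema.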

Fan-out/Star Model

The Fan-out/Star Model starts with a single Source, processes the data, and then distributes the results to multiple Sinks. This model is effective when the processed data is consumed by several downstream applications or systems. It allows the same source data to be transformed once and delivered to different destinations, each serving its own purpose or system requirement.
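A minimal Python sketch of fan-out follows. The click-count data, the `fan_out` helper, and the two sinks (`dashboard_sink`, `alert_sink`) are hypothetical names introduced for illustration; in practice the sinks might be a warehouse table, a message queue, or a search index.

```python
from typing import Callable, Dict, List


def source() -> List[dict]:
    """Hypothetical Source: raw click events."""
    return [
        {"user": "a", "clicks": 3},
        {"user": "b", "clicks": 0},
        {"user": "a", "clicks": 2},
    ]


def process(records: List[dict]) -> Dict[str, int]:
    """Process stage: total clicks per user."""
    totals: Dict[str, int] = {}
    for record in records:
        totals[record["user"]] = totals.get(record["user"], 0) + record["clicks"]
    return totals


def fan_out(result: Dict[str, int], sinks: List[Callable[[Dict[str, int]], None]]) -> None:
    """Deliver the same processed result to every Sink."""
    for deliver in sinks:
        deliver(result)


# Two hypothetical Sinks, each with a different purpose.
dashboard: Dict[str, int] = {}
alerts: List[str] = []


def dashboard_sink(totals: Dict[str, int]) -> None:
    """Keeps the full totals for reporting."""
    dashboard.update(totals)


def alert_sink(totals: Dict[str, int]) -> None:
    """Keeps only users with no activity."""
    alerts.extend(user for user, count in totals.items() if count == 0)


fan_out(process(source()), [dashboard_sink, alert_sink])
```

Processing happens once; each Sink then decides what subset or shape of the result it needs.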

Each model serves distinct data engineering needs, from simple data pipelines to complex ETL processes involving multiple sources or destinations.