Disclaimer

This is not an official Textbook for Data Warehouse.

This is only a reference material with the lecture presented in the class.

If you find any errors, please do email me at chandr34 @ rowan.edu

Fundamentals

- Terms to Know

- Jobs

- Skills needed for DW developer

- Application Tiers

- Operational Database

- What is a Data Warehouse

- Types of Data

- Data Storage Systems

- Data Warehouse 1980 - Current

- Data Warehouse vs Data Mart

- Data Warehouse Architecture

- Data Warehouse Characteristic

- Tools

- Cloud vs On-Premise

- Steps to design a Data Warehouse

Terms to Know

These are some basic terms to know. We will learn lot more going forward.

DBMS - Database Management System

RDBMS - Relational Database Management System

ETL - Extract Transform Load - Back office process for loading data to Data Warehouse.

Bill Inmon - Considered the Father of Data Warehousing.

Ralph Kimball - He’s the father of Dimensional modeling and the Star Schema.

OLTP - Online Transaction Processing. The classic operational database for taking orders, reservations, etc.

OLAP - Online Analytical Processing. Big database providers (IBM, Teradata, Microsoft.. ) started integrating OLAP into their systems.

MetaData - Data about Data.

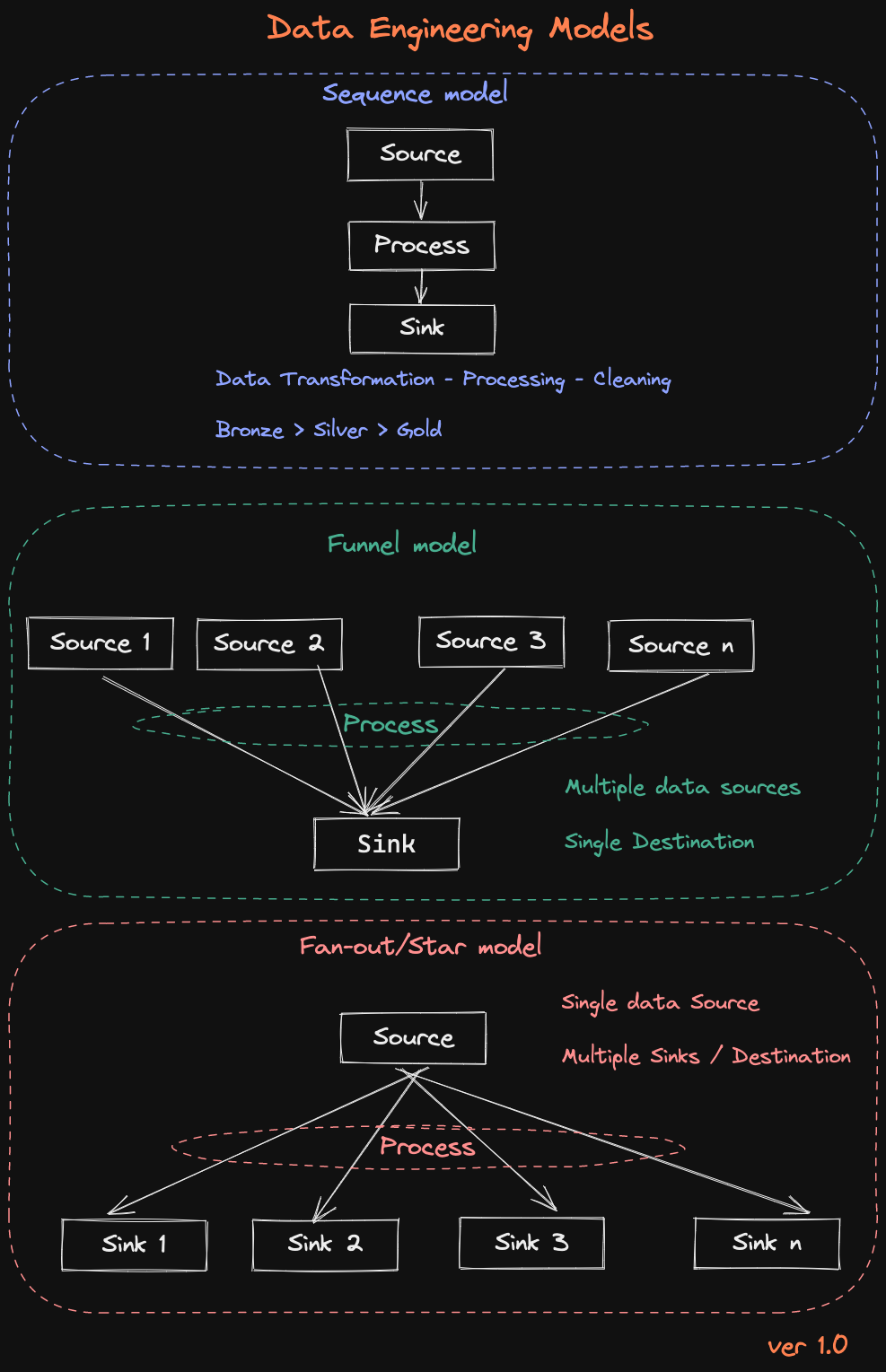

Data Pipeline - A set of processes that move data from one system to another.

ETL - Extract Transform Load

ELT - Extract Load Transform

Jobs

-

Data Analyst: Plays a crucial role in business decision-making by analyzing data and providing valuable insights, often sourced from a data warehouse.

-

Data Scientist: Employs data warehousing as a powerful tool for modeling, statistical analysis, and predictive analytics, enabling the resolution of complex problems.

-

Data Engineer: Demonstrates precision and dedication in their work by focusing on the design, construction, and maintenance of data pipelines that facilitate the movement of data into and out of a data warehouse.

-

Analyst Engineer: A hybrid role combining the skills of a data analyst and data engineer, often involved in analyzing data and developing the infrastructure to support that analysis.

-

Data Architect: Designs and oversees the implementation of the overall data infrastructure, including data warehouses, to ensure scalability and efficiency.

-

Database Administrator (DBA): This person manages the performance, integrity, and security of databases, including those used in data warehouses.

-

Database Security Analyst: This position focuses on ensuring the security of databases and data warehouses and protecting against threats and vulnerabilities.

-

Database Manager: Oversees the overall management and administration of databases, including those used for data warehousing.

-

Business Intelligence (BI) Analyst: Utilizes data from a data warehouse to generate reports, dashboards, and visualizations that aid business decision-making.

-

AI/ML Engineer: Uses warehouse data to build and deploy machine learning models, particularly in enterprise environments where historical data is crucial.

-

Compliance Analyst: Ensures that data warehousing solutions meet regulatory requirements, especially in industries like finance, healthcare, or insurance.

-

Chief Data Officer (CDO): An executive role responsible for an organization’s data strategy, often overseeing data warehousing as a critical component.

-

Data Doctor: Typically diagnoses and "fixes" data-related issues within an organization. This role might involve data cleansing, ensuring data quality, and resolving inconsistencies or errors in datasets.

-

Data Advocate: Champions the use of data within an organization. They promote data-driven decision-making and ensure that the value of data is recognized and utilized effectively across different departments.

Prefix with Cloud & Big Data

Skills needed

A data warehouse developer is responsible for designing, developing, and maintaining data warehouse systems. To be qualified as a data warehouse developer, a person should possess a combination of technical skills and knowledge in the following areas:

Must-have skills

-

Database Management Systems (DBMS): A strong understanding of relational and analytical database management systems such as Oracle, SQL Server, PostgreSQL, or Teradata.

-

SQL: Proficiency in SQL (Structured Query Language) for creating, querying, and manipulating database objects.

-

Data Modeling: Knowledge of data modeling techniques, including dimensional modeling (star schema, snowflake schema), normalization, and denormalization. Familiarity with tools such as Vertabelo or ERwin, or PowerDesigner is a plus.

-

ETL (Extract, Transform, Load): Experience with ETL processes and tools like Microsoft SQL Server Integration Services (SSIS), Talend, or Informatica PowerCenter for extracting, transforming, and loading data from various sources into the data warehouse.

-

Data Integration: Understanding of data integration concepts and techniques, such as data mapping, data cleansing, and data transformation.

-

Data Quality: Knowledge of data quality management and techniques to ensure data accuracy, consistency, and integrity in the data warehouse.

-

Performance Tuning: Familiarity with performance optimization techniques for data warehouses, such as indexing, partitioning, and materialized views.

-

Reporting and Data Visualization: Experience with reporting and data visualization tools like Tableau, Power BI, or QlikView for creating dashboards, reports, and visualizations to analyze and present data.

-

Big Data Technologies: Familiarity with big data platforms such as Spark and NoSQL databases like MongoDB or Cassandra can be beneficial, as some organizations incorporate these technologies into their data warehousing solutions.

-

Programming Languages: Knowledge of programming languages like Python, Java, or C# can help implement custom data processing logic or integrate with external systems.

-

Cloud Platforms: Experience with cloud-based data warehousing solutions such as Databricks can be a plus as more organizations move their data warehouses to the cloud.

-

Version Control: Familiarity with version control systems like Git or SVN for managing code and collaborating with other developers.

Nice to have skills

In summary, while Linux skills are not a core requirement for a data warehouse developer, they can be valuable for managing, optimizing, and troubleshooting your data warehousing environment.

- Server Management

- Scripting and Automation (AWK, Bash)

- File System and Storage Management

- Networking and Security

- Performance Tuning

- Working with Cloud Platforms

- Deploying and Managing Containers (Docker, Podman, Kubernetes)

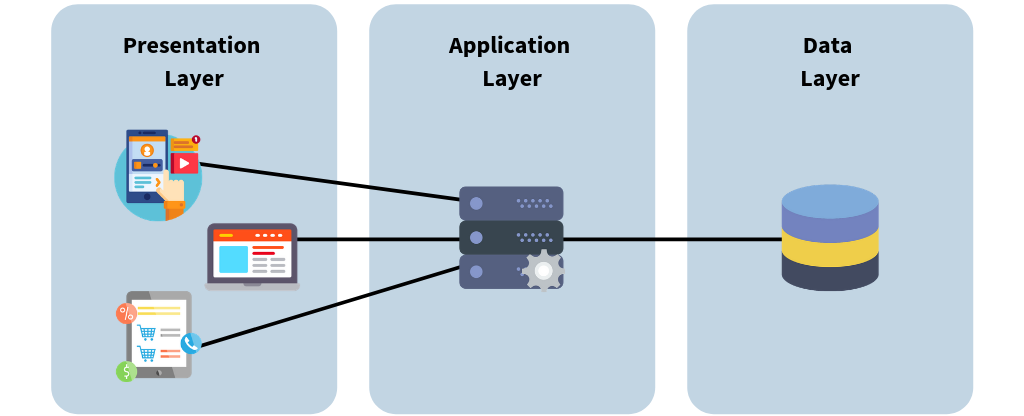

Application Tiers

Where does Database fit in?

- Database Tier: Actual data

- Application Tier: Business logic

- Presentation Tier: Front end (Web, Client, Mobile App)

https://bluzelle.com/blog/things-you-should-know-about-database-caching

Trending Technologies

- Robotics

- AI

- IoT

- Blockchain

- 3D Printing

- Internet / Mobile Apps

- Autonomous Cars - VANET Routing

- VR / AR - Virtual Reality / Augmented Reality

- Wireless Services

- Quantum Computing

- 5G

- Voice Assistant (Siri, Alexa, Google Home)

- Cyber Security

- Big Data Analytics

- Machine Learning

- DevOps

- NoSQL Databases

- Microservices Architecture

- Fintech

- Smart Cities

- E-commerce Platforms

- HealthTech

Do you see any pattern or anything common in these?

Visual Interface & Data

Operational Database

An operational database management system is software that allows users to quickly define, modify, retrieve, and manage data in real time.

While conventional databases rely on batch processing, operational database systems are oriented toward real-time, transactional operations.

Let's take a Retail company that uses several systems for its day-to-day operations.

They buy software from various vendors and manage their business.

- Sales Transactions

- Inventory Management

- Customer Relationship Management

- HR Systems

and so on.

Other Examples:

- Banner Database (Registration)

- eCommerce Database

- Blog Database

- Banking Transactions

What is a Data Warehouse

- Is it a Database?

- Is it Big Data?

- Is it the backend for Visualization?

Yes! Yes! & Yes!

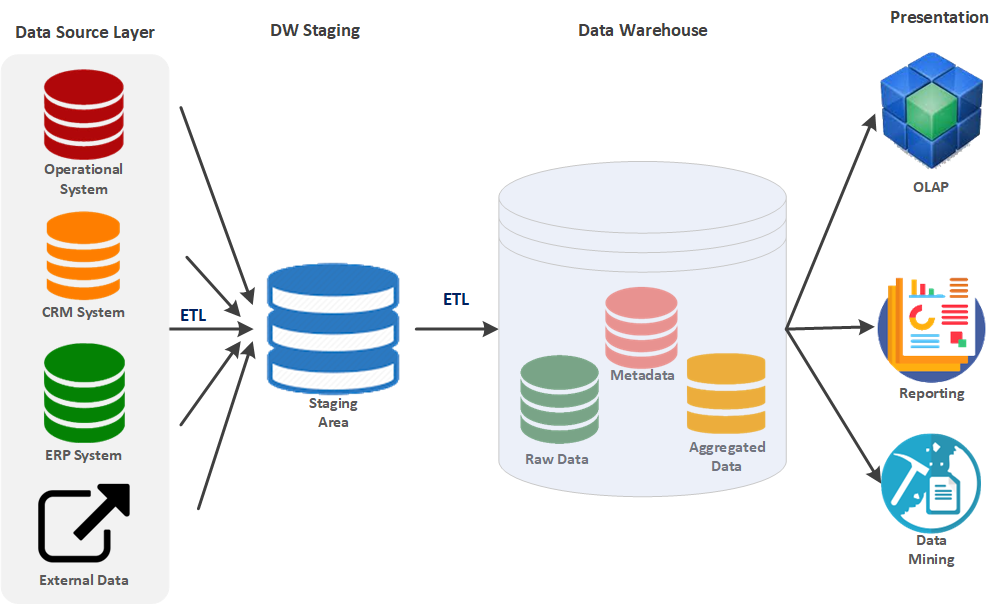

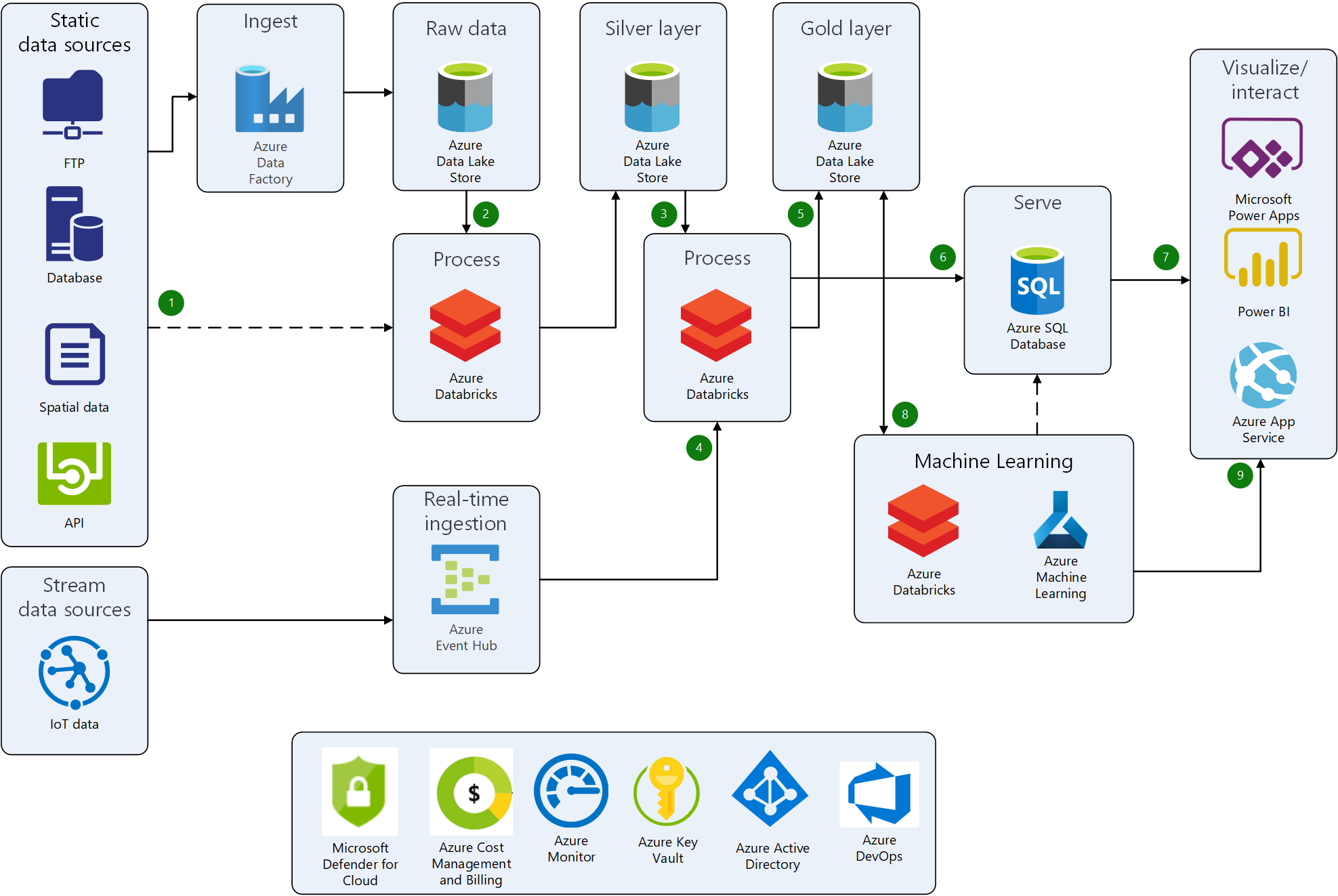

Typical Data Architecture

Data Source Layer

Operational System: This includes data from various operational systems like transactional databases. CRM System: Data from customer relationship management systems. ERP System: Data from enterprise resource planning systems. External Data: Data that might come from external sources outside the organization. These sources feed data into the Data Warehouse system. Depending on the source, the data might be structured or unstructured.

DW Staging

Staging Area: This is an intermediate storage area used for data processing during the ETL (Extract, Transform, Load) process. ETL Process: Extract: Data is extracted from various data sources. Transform: The extracted data is transformed into a format suitable for analysis and reporting. This might include cleaning, normalizing, and aggregating the data. Load: The transformed data is then loaded into the Data Warehouse.

Data Warehouse

Raw Data: The unprocessed data loaded directly from the staging area. Metadata: Data about the data, which includes information on data definitions, structures, and rules. Aggregated Data: Summarizing or aggregating data for efficient querying and analysis.

Presentation Layer

**OLAP (Online Analytical Processing): **This tool is used for multidimensional data analysis in the warehouse, enabling users to analyze data from various perspectives. Reporting: Involves generating reports from the data warehouse, often for decision-making and business intelligence purposes. Data Mining: This involves analyzing large datasets to identify patterns, trends, and insights, often used for predictive analytics.

Flow of Data

Data Source Layer → Staging Area: Data is extracted from multiple sources and brought into the staging area. Staging Area → Data Warehouse: The data is transformed and loaded into the data warehouse. Data Warehouse → Presentation Layer: The data is then used for various purposes, such as OLAP, Reporting, and Data Mining.

This architecture ensures that data is collected, processed, and made available for analysis in a structured and efficient manner, facilitating business intelligence and decision-making processes.

Problem Statement

RetailWorld uses different systems for sales transactions, inventory management, customer relationship management (CRM), and human resources (HR). Each system generates a vast amount of data daily.

The company's management wants to make data-driven decisions to improve its operations, optimize its supply chain, and enhance customer satisfaction. However, they face the following challenges:

-

Data Silos: Data is stored in separate systems, making gathering and analyzing information from multiple sources challenging.

-

Inconsistent Data: Different systems use varying data formats, making it hard to consolidate and standardize the data for analysis.

-

Slow Query Performance: As the volume of data grows, querying the operational databases directly becomes slower and impacts the performance of the transactional systems.

-

Limited Historical Data: Operational databases are optimized for current transactions, making storing and analyzing historical data challenging.

Solution

-

Centralized Data Repository: The Data Warehouse consolidates data from multiple sources, breaking down data silos and enabling a unified view of the company's information.

-

Consistent Data Format: Data is cleaned, transformed, and standardized to ensure consistency and accuracy across the organization.

-

Improved Query Performance: The Data Warehouse is optimized for analytical processing, allowing faster query performance without impacting the operational systems.

-

Historical Data Storage: The Data Warehouse can store and manage large volumes of historical data, enabling trend analysis and long-term decision-making.

-

Enhanced Reporting and Analysis: The Data Warehouse simplifies the process of generating reports and conducting in-depth analyses, providing insights into sales trends, customer preferences, inventory levels, and employee performance.

Key Features

- They are used for storing historical data.

- Low Latency / Response time is fast.

- Data consistency and quality of data.

- Used with Business Intelligence, Data Analysis & Data Science.

By doing all the above, it answers some of the questions the business/needs.

- Sales of particular items this month compared to last month?

- Top 3 best-selling products of the quarter?

- How is the internet traffic before the pandemic / during the pandemic?

It's read-only for end-users and upper management.

Who are the end users?

- Data Analysts

- Data Scientists.

Data Size

A query can range from a few thousand rows to billion rows.

Need for Data Warehouse

Amazon CEO wants to know the sales of the new Kindle Scribe reader they launched this year compared to other eReaders in the next 30 mins.

Where do we look for this info?

- Transactional databases use every resource to serve customers. Querying on them may slow down a customer's request.

- Also, they are Live databases. So they may or may not have historical data.

- Chances are data format may differ for each region/country in which Amazon is doing business in.

It's not just Amazon; it's everywhere.

- Airlines

- Bank Transactions / ATM transactions

- Restaurant chains, and so on.

Companies will have multiple databases that are not linked with each other for specific reasons.

- Sales Database

- Customer Service Database

- Marketing Database

- Inventory Database

- Human Resources Database

There is no reason for linking HR data to Sales data, but the CFO might need this info for budgeting.

Fun Qn: How many times have you received Marketing mail/email from the same company you have an account with?

Current State of the Art

The business world decided as follows.

-

A Database should exist just for doing BI & Strategic reports.

-

It should be separated from the operational / transaction database for the day-to-day running of the business.

-

It should encompass all aspects of the business (sales, inventory, hr, customer service…)

-

An enterprise-wide standard definition for every field name in every table.

- Example: employee number should be identical across DB. empNo, eNo,EmployeeNum.. empID not acceptable.

-

Metadata database (data about data) defining assumptions about each field, describing transformations performed and cleansing operations, etc.

- Example: If US telephone, it should be nnn-nnn-nnnn or (nnn) nnn-nnnn

-

Data Warehouse is read-only to its end users so that everyone will use the same data, and there will be no mismatch between teams.

-

Fast access, even if it's big data.

How its done?

-

Operational databases for tracking sales, inventory, support calls, chat, and email. (Relational / NoSQL)

-

The Back Office team (ETL team) gathers data from multiple sources, cleans it, transforms it, massages the missing, and stores it in the Staging database.

- If the phone number is not in the format, then format it.

- If the email address is not linked to the chat/phone record, read it from the Customer and update it.

-

Staging database: Working database where all the work is done to the data. It then dumps to the data warehouse, which is visible as “read-only” to end users.

-

Data Analysts then build reports using Data Warehouse.

Back to the original question.

If all of these things are done right, Amazon's CEO can get the report in less than 30 minutes without interfering with business operations. 👍

Types of Data

- Structured Data (rows/columns CSV, Excel)

- Semi-Structured Data (JSON / XML)

- Unstructured Data (Video, Audio, Document, Email)

Structured Data

| ID | Name | Join Date |

|---|---|---|

| 101 | Rachel Green | 2020-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Semi-Structured Data

JSON

[

{

"id":1,

"name":"Rachel Green",

"gender":"F",

"series":"Friends"

},

{

"id":"2",

"name":"Sheldon Cooper",

"gender":"M",

"series":"BBT"

}

]

XML

<?xml version="1.0" encoding="UTF-8"?>

<actors>

<actor>

<id>1</id>

<name>Rachel Green</name>

<gender>F</gender>

<series>Friends</series>

</actor>

<actor>

<id>2</id>

<name>Sheldon Cooper</name>

<gender>M</gender>

<series>BBT</series>

</actor>

</actors>

Unstructured Data

- Text Logs: Server logs, application logs.

- Social Media Posts: Tweets, Facebook comments.

- Emails: Customer support interactions.

- Audio/Video: Customer call recordings and marketing videos.

- Customer Reviews: Free-form text reviews.

- Images: Product images user profile pictures.

- Documents: PDFs, Word files.

- Sensor Data: IoT data streams.

These can be ingested into modern data warehouses for analytics, often after some preprocessing. For instance, text can be analyzed with NLP before storing, or images can be processed into feature vectors.

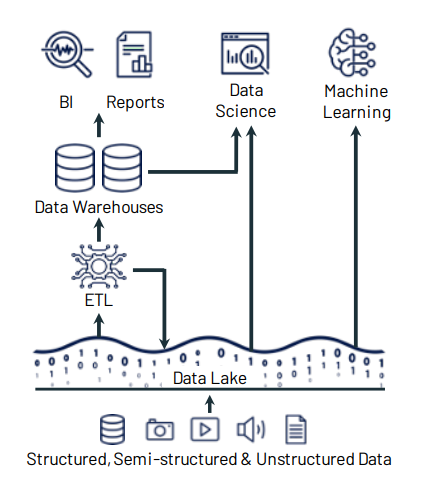

Data Storage Systems

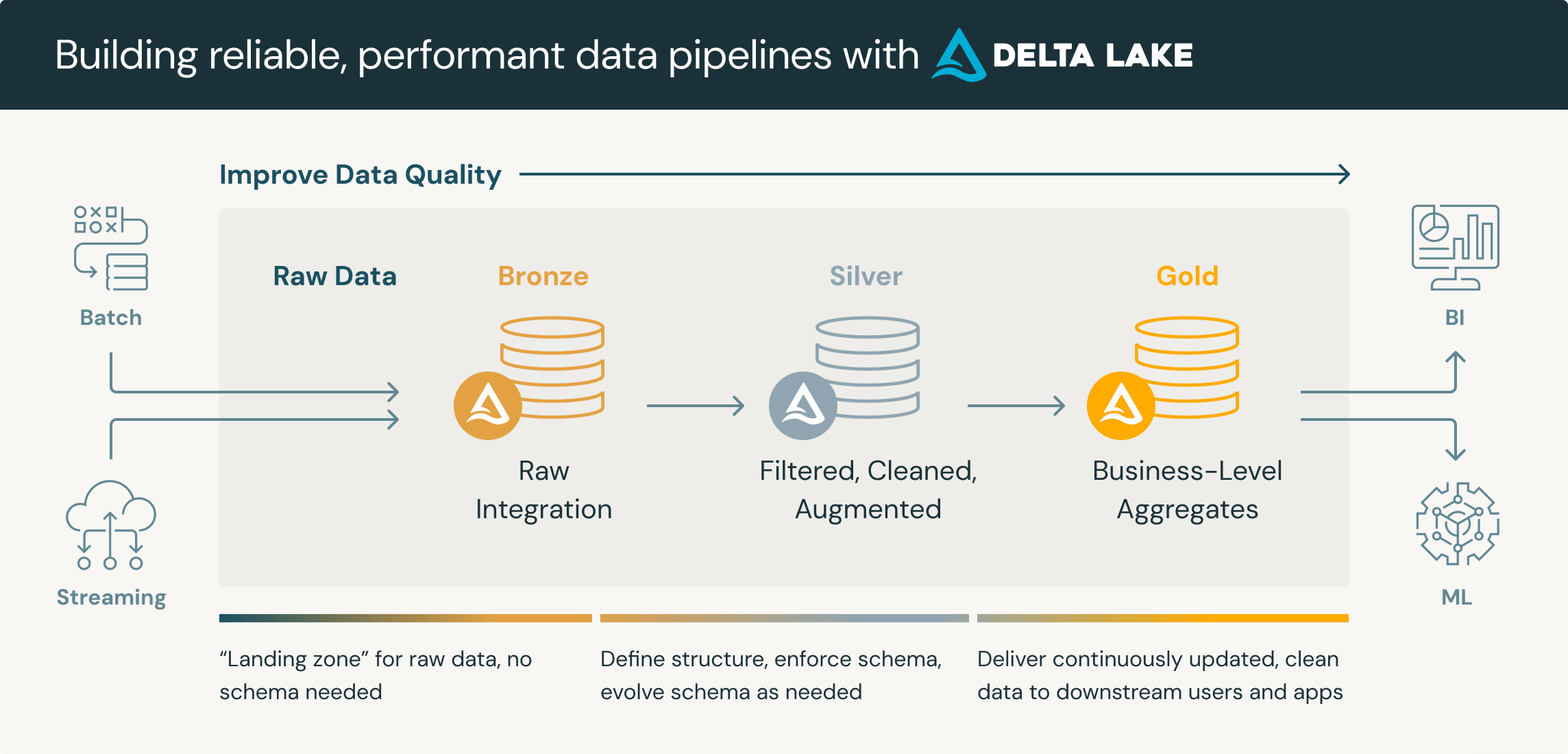

Data Lake

A place where you dump all forms of data of your business.

Structured / Un Structured / Semi-Structured.

Example

- Customer service chat logs, voice recordings, email, website comments, social media.

- Need a cheap way to store different types of data in large quantities.

- Data is not needed now, but planning to use it for later use.

- Larger organizations need all kinds of data to analyze and improve business.

Data Warehouse

- Data Warehouse - Stores already modeled/structured data and ready for use.

- Data from the Warehouse can be used for analyzing its operational data.

- There will be developers to support the data.

- It’s multi-purpose storage for different use cases.

Data Mart

A subset of Data Warehouse for a specific use case.

A specific group of users uses it, so it is more secure and performs better.

Example: Pandemic Analysis

Dependent Data Marts - constructed from an existing data warehouse.

Example: Grocery / School Supplies

Independent Data Marts - built from scratch and operated in silos.

Example: Mask / Glove Sales

Hybrid Data Marts - Mix and match both.

Data Warehouse 1980 - Current

Data Warehouses (1980 - 2000):

Pros

- High Quality Data.

- Standard modeling technique (star schema/Kimball).

- Reliability through ACID transactions.

- Very good fit for business intelligence.

Cons

- Closed Formats.

- Support only SQL.

- No support for Machine Learning.

- No streaming support.

- Limited scaling support.

Data Lakes (2010 - 2020)

Pros

- Support for open formats.

- Can support all data types & their use cases.

- Scalability through underlying cloud storage.

- Support for Machine Learning & AI.

Cons

- Weak schema support.

- No ACID transaction support.

- Low data quality.

- Leads to "Data Swamps".

Lakehouses (2020 and beyond):

Pros

- Support for both BI and ML/AI workloads.

- Standard Storage Format.

- Reliability through ACID transactions.

- Scalability through underlying cloud storage.

Cons

- Cost Considerations.

- Data Governance and Security.

- Performance Overhead. (Due to ACID transactions)

Data Warehouse vs Data Mart

| Data Warehouse | Data Mart |

|---|---|

| Independent application / system | Specific to support one system. |

| Contains detailed data. | Mostly aggregated data. |

| Involves top-down/bottom-up approach. | Involves bottom-up approach. |

| Adjustable and exists for an extended period of time. | Restricted for a project / shorter duration of time. |

Data Warehouse Architecture

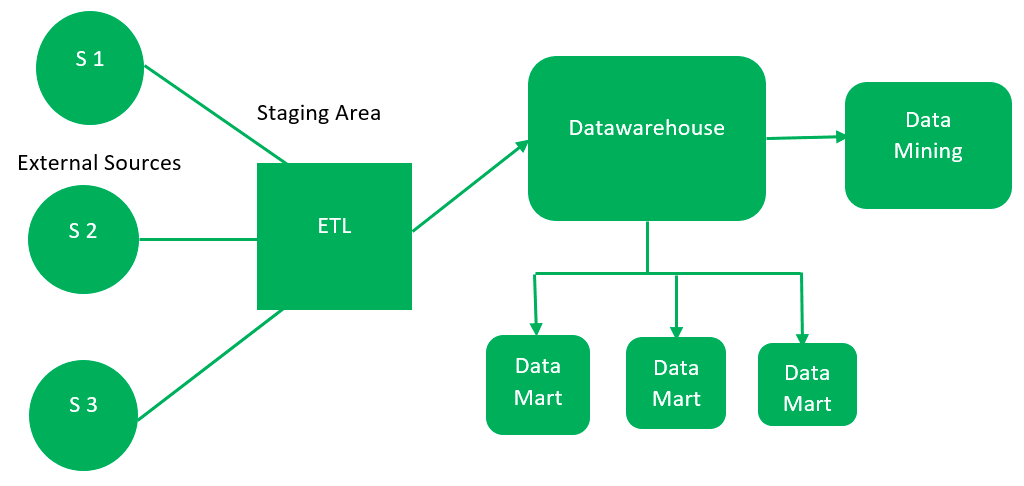

Top-Down Approach

This method begins with developing a comprehensive enterprise data warehouse (EDW) consolidating all organizational data. This central warehouse creates data marts to serve specific business units or functions. The top-down approach ensures a unified, consistent data model across the enterprise but typically requires more upfront investment in time and resources.

External Sources (ETL) >> Data Warehouse >> Data Mining

>> Data Mart 1

>> Data Mart 2

In the words of Inmon

"Data Warehouse as a central repository for the complete organization and data marts are created from it after the complete data warehouse has been created."

src: https://www.geeksforgeeks.org/data-warehouse-architecture/

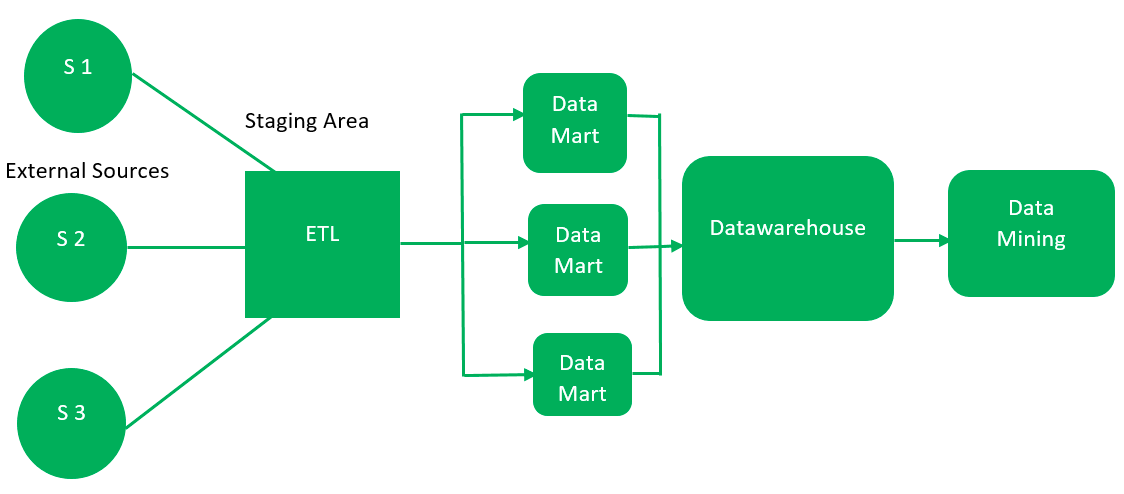

Bottom-Up Approach

This approach starts by creating small, specific data marts for individual business units. These data marts are designed to meet the immediate analytical needs of departments. Over time, these marts are integrated into a comprehensive enterprise data warehouse (EDW). The bottom-up approach is agile and allows quick wins but can lead to challenges integrating data marts into a cohesive system.

External Sources (ETL) >> Data Mart 1 >> Data Warehouse >> Data Mining

>> Data Mart 2

Kimball gives this approach: data marts are created first and provide a thin view for analysis, and a data warehouse is created after complete data marts have been created.

src: https://www.geeksforgeeks.org/data-warehouse-architecture/

Summary

Each approach has its advantages and trade-offs, with the bottom-up being more iterative and flexible, while the top-down offers a more structured and holistic view of the organization’s data.

Examples:

Top Down - Popular big retail stores likely to follow this architecture. As they have build a centralized data warehouse that feeds their stores. Similarly Financial organlizations like Banks may take top-down approach.

Bottom Up - Popular OTT have such models. Initially bring movies, then add their own production and add third party providers.

Data Warehouse Characteristic

Characteristics

- Subject Oriented

- Integrated

- Time-Variant

- Non-Volatile

- Data Loading (ETL)

- Data Access (Reporting / BI)

Functions

- Data Consolidation

- Data Cleaning

- Data Integration

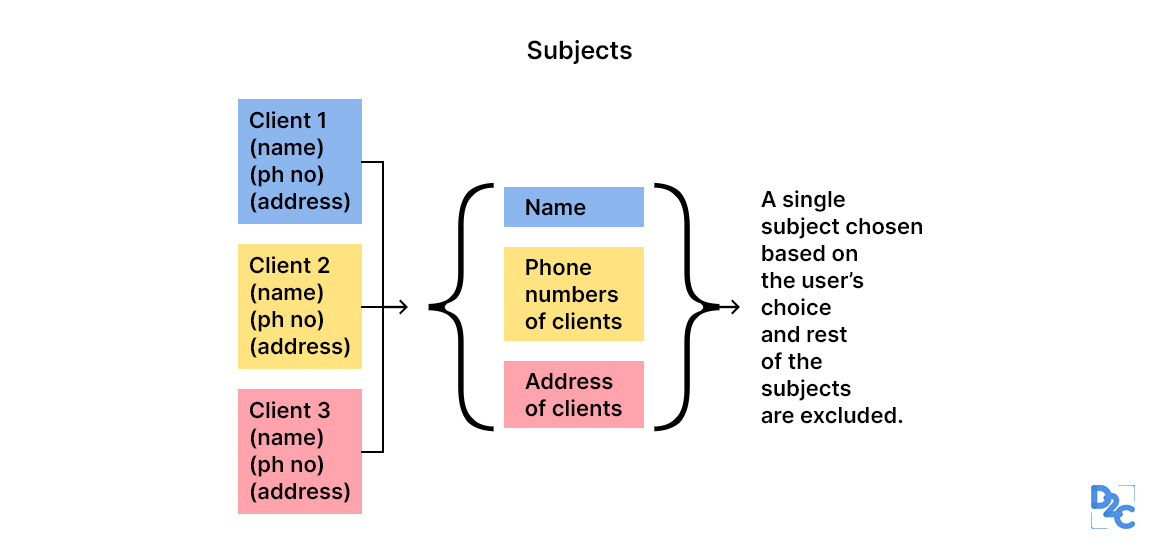

Subject Oriented

src: https://unstop.com/blog/characteristics-of-data-warehouse

Data analysis for a business's decision-makers can be done quickly by constricting to a particular subject area of the Data warehouse.

Do not add unwanted info on subjects for decision-making.

When analyzing customer information, it's crucial to focus on the relevant data and avoid unnecessary details, such as food habbits, which can distract from the main task.

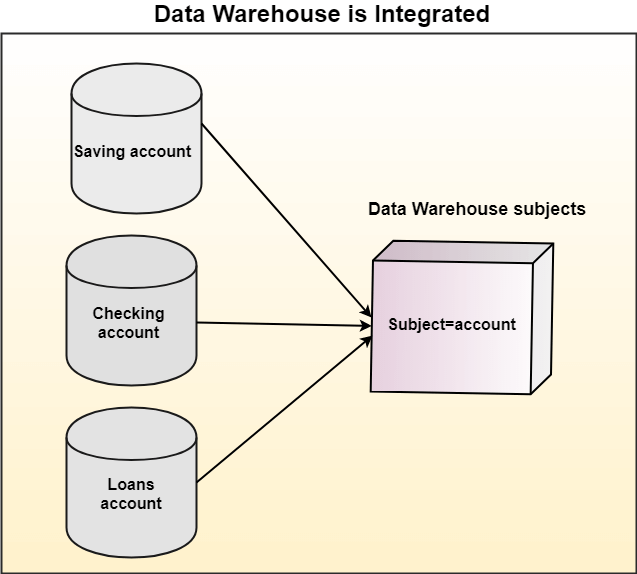

Integrated

Multiple Source Systems:

In most organizations, data is stored across various systems. For example, a bank might have separate systems for savings accounts, checking accounts, and loans. Each system is designed to serve a specific purpose and might have its own database, schema, and data formats.

Unified Subject Areas:

The data warehouse is a centralized repository where data from these different source systems is brought together. This integration is not just about storing the data in one place; it involves transforming and aligning the data to be analyzed.

Consistency and Standardization:

During integration, the data is often standardized to ensure consistency. For example, account numbers might be formatted differently in the source systems, but they are unified in a standard format in the data warehouse. This standardization is crucial for accurate reporting and analysis.

Benefits:

Holistic View: The data warehouse provides a comprehensive view of a subject by integrating data from different sources. For example, a bank can now analyze a customer's relationship across all accounts rather than looking at each in isolation.

Improved Decision-Making: With integrated data, organizations can perform more sophisticated analyses, leading to better decision-making. For example, they can understand a customer's total exposure by analyzing their savings, checking, and loan accounts together.

Efficiency: Analysts and business users can access all the relevant data in one place without needing to query multiple systems.

Time Variant

Data warehouses, unlike operational databases, are designed with a unique ability to maintain a comprehensive historical record of data. This feature not only allows for trend analysis and historical reporting but also ensures the reliability of the system for comparison over time.

For example, if a customer changes their address, a data warehouse will keep old and new addresses, along with timestamps indicating when the change occurred.

Data warehouses often include a time dimension, allowing users to analyze data across different periods. This could include daily, monthly, quarterly, or yearly trends, providing insights into how data changes over time.

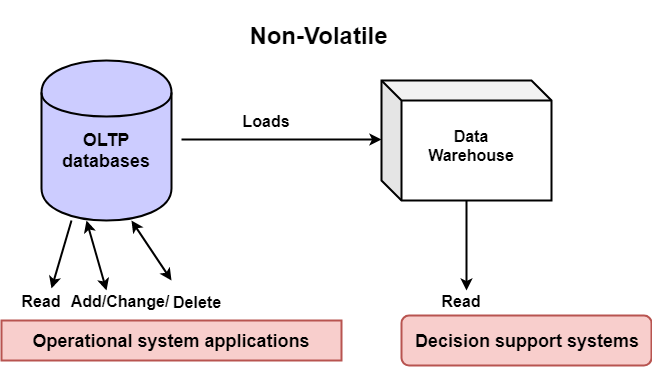

Non Volatile

Non-Volatile Nature: The data warehouse does not allow modifications to the data once it is loaded. This characteristic ensures that historical data is preserved for long-term analysis.

The cylinder on the left side of the diagram represents OLTP databases, which are typically used in operational systems. These databases handle day-to-day transactions, such as reading, adding, changing, or deleting data. OLTP systems are optimized for fast transaction processing.

The cube represents the data warehouse, a dedicated repository designed for analysis and reporting. Unlike OLTP systems, the data warehouse is non-volatile, meaning that once data is loaded into the warehouse, it remains stable and is not updated or deleted. This stability is a key feature, ensuring that historical data is preserved intact for analysis over time.

Tools

Traditional Solutions

- SQL Server

- Oracle

- PostgreSQL

- IBM DB2

- Teradata

- Informatica

- SAP HANA

Cloud Solutions

- Databricks

- Snowflake

- Microsoft Fabric

- Google BigQuery

- Amazon Redshift

ETL/ELT Tools

- Talend

- Apache NiFi

- Fivetran

- Apache Airflow (orchestrator)

Data Integration and Data Prep

- Alteryx

- Trifacta

- dbt (data build tool)

BI and Analytics Tools

- Tableau

- QlikView

- Power BI

- Looker

Data Lakes

- AWS Lake Formation

- Azure Data Lake

- Google Cloud Storage

- Apache Hadoop (HDFS)

Data Cataloging and Governance

- Unity Catalog

- Apache Atlas

- Collibra

- Alation

- Informatica Data Catalog

Big Data Technologies

- Apache Spark

- Apache Hive

- Apache Impala

- Apache HBase

- Presto

Cloud vs On-Premise

| Feature | Cloud Datawarehouse | On-Premise |

|---|---|---|

| Scalability | Instant Up / Down, Scale In / Out | Reconfiguring / purchasing hardware, software, etc. |

| Availability | Up to 99.99% | Depends on infrastructure. |

| Security | Provided by cloud provider | Depends on the competence of the in-house IT team. |

| Performance | Serve multiple geo locations, helps query performance | Scalability challenge |

| Cost-effectiveness | No hardware / initial cost. Pay only for usage. | Requires significant initial investment, salary. |

src: https://www.scnsoft.com/analytics/data-warehouse/cloud

Steps to design a Data Warehouse

- Gather Requirements

- Environment (Physical / Cloud)

- Dev

- Test

- Prod

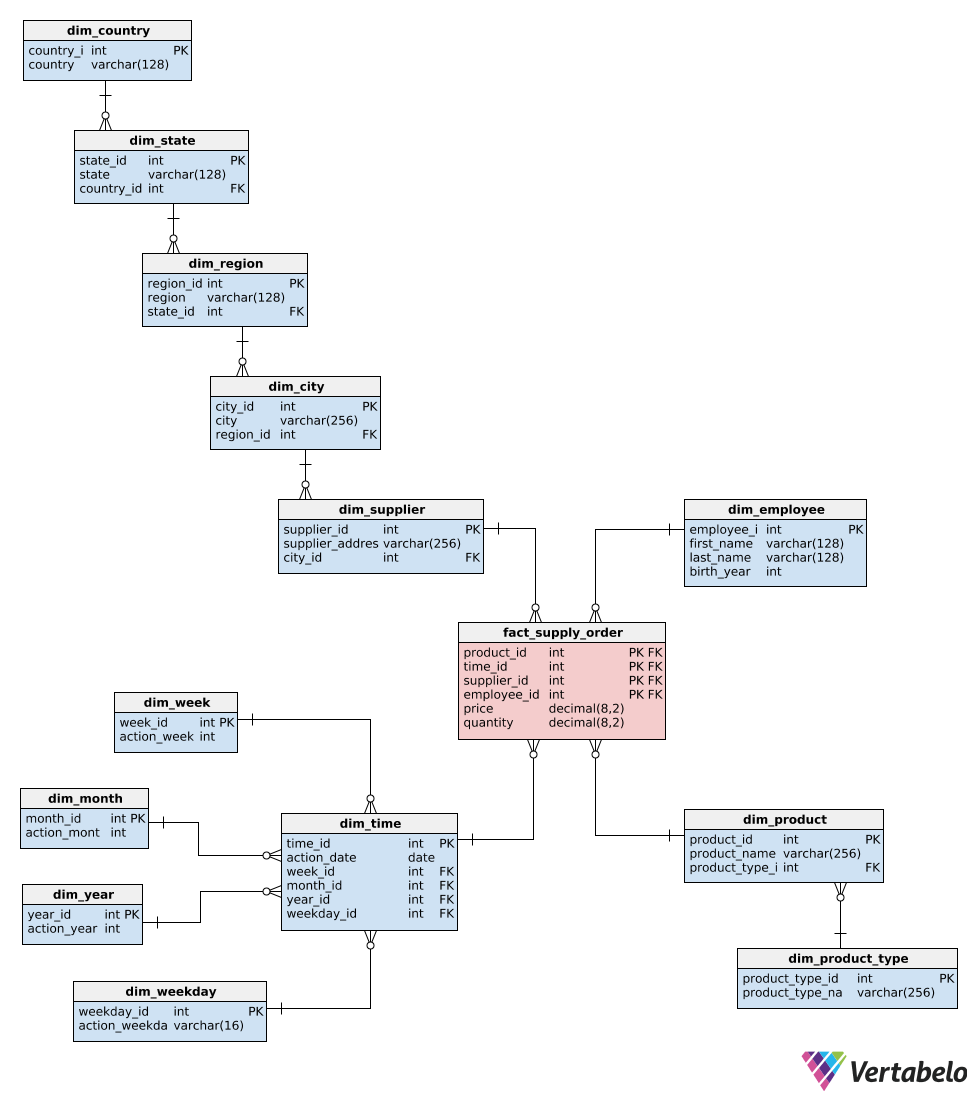

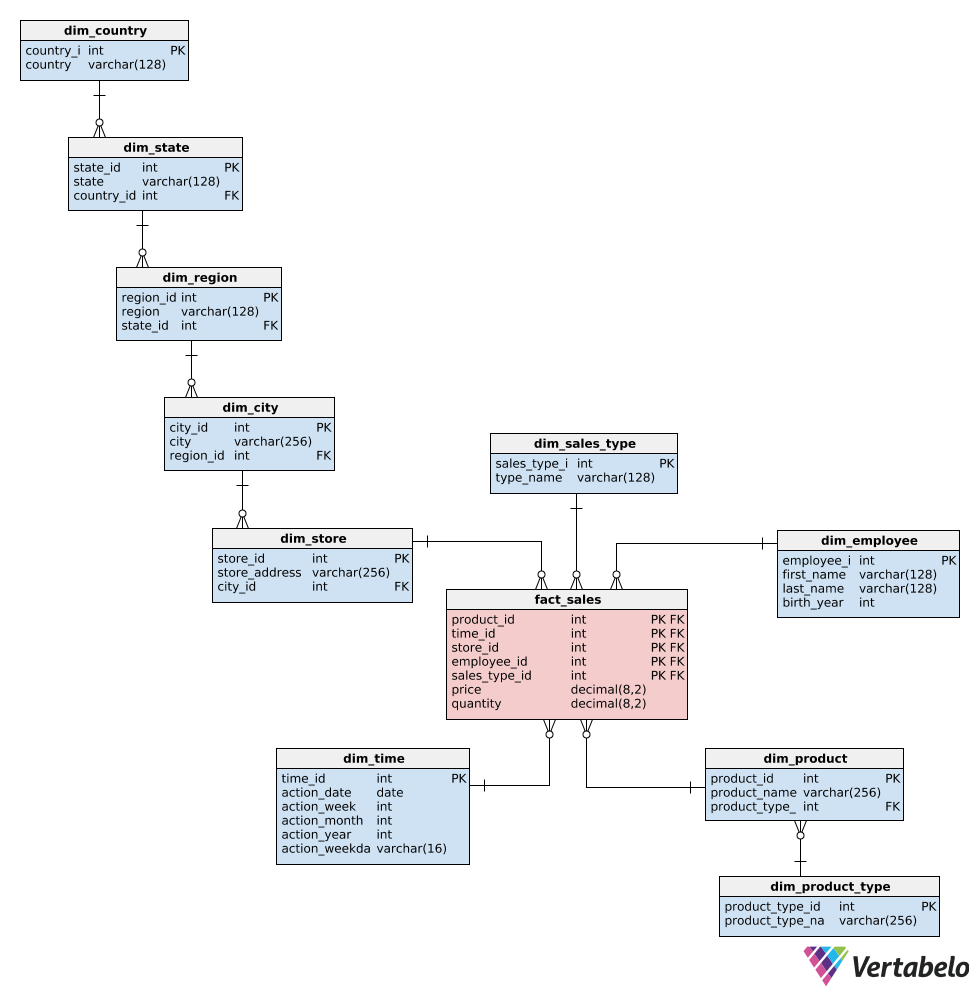

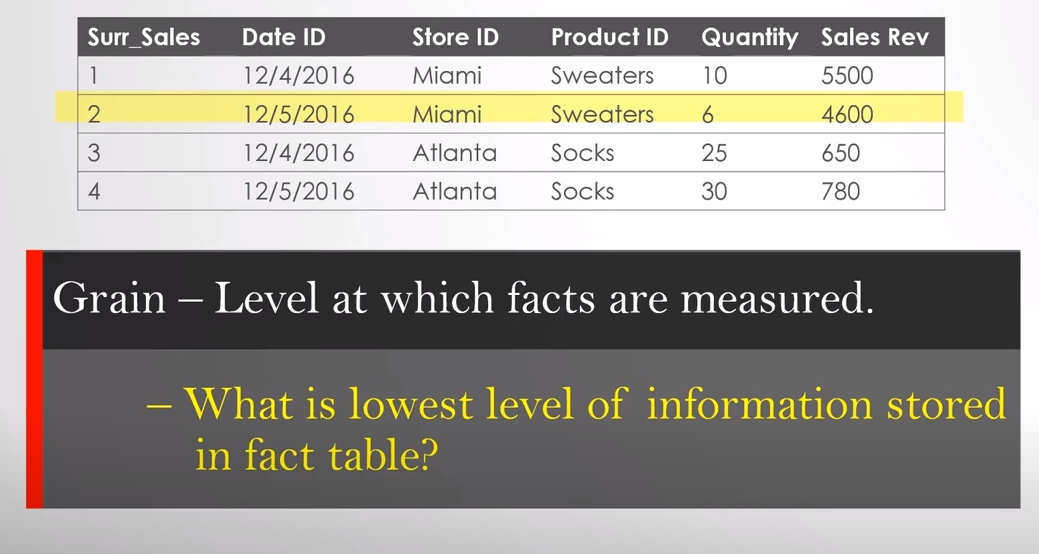

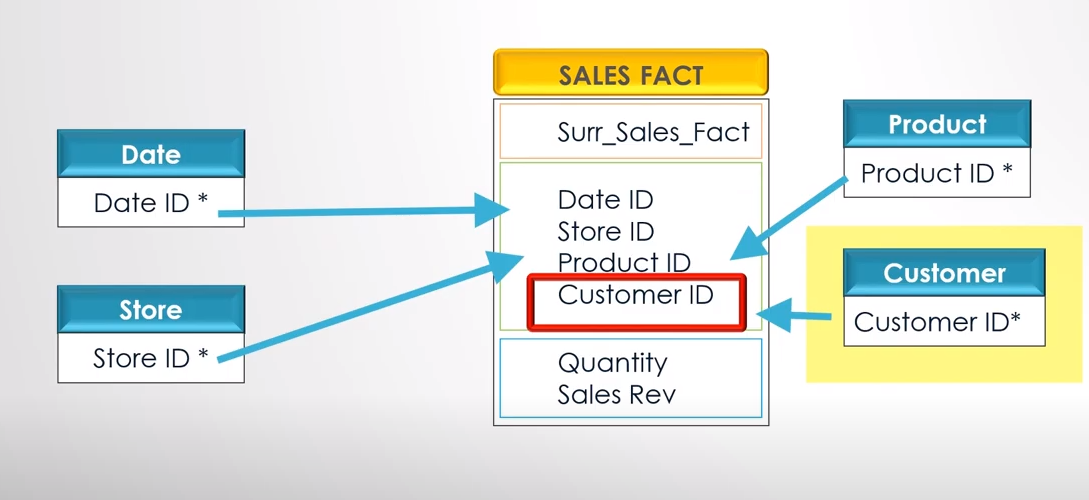

- Data Modeling

- Star Schema

- Snowflake Schema

- Galaxy Schema

- Choose ETL - ELT Solution

- OLAP Cubes or Not

- Visualization Tool

- Query Performance

Gather Requirements

-

DW is subject-oriented.

-

Needs data from all related sources. DW is valuable as the data contained within it.

-

Talk to groups and align goals with the overall project.

-

Could you determine the scope of the project and how it helps the business?

-

Discover future needs with the data and technology solution.

-

Disaster Recovery model.

-

Security (threat detection, mitigation, monitoring)

-

Anticipate compliance needs and mitigate regulatory risks.

Environment

-

Need separate environments for Development, Testing, and Production.

-

Development & Testing will have some % of sample data from Production.

-

Testing and Production will have a similar HW environment. (Cloud / In house)

-

Nice to have a similar environment for development.

-

Track changes, indexes, and query changes are done in the lower environment.

-

DR environment is part of the Production release.

-

Suppose it's in-house; deploy it in different data centers. If it's cloud, deploy it in different regions.

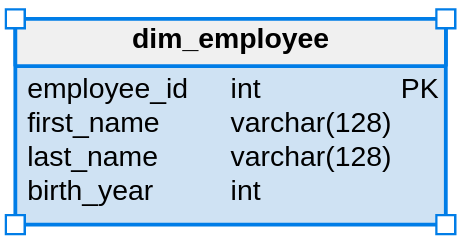

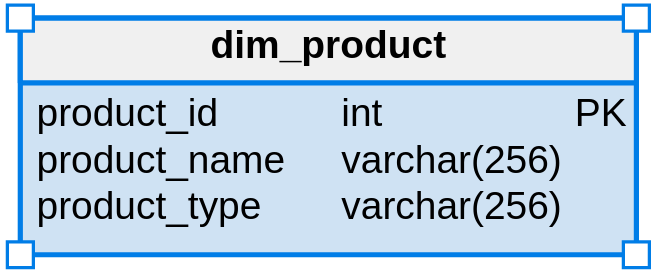

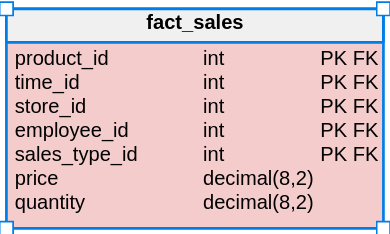

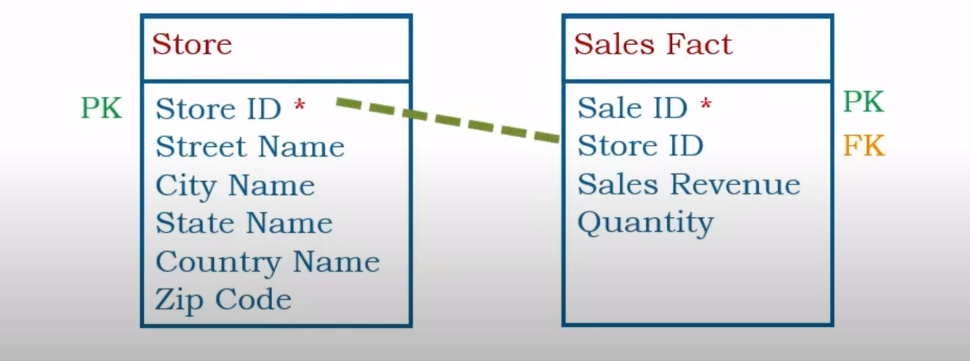

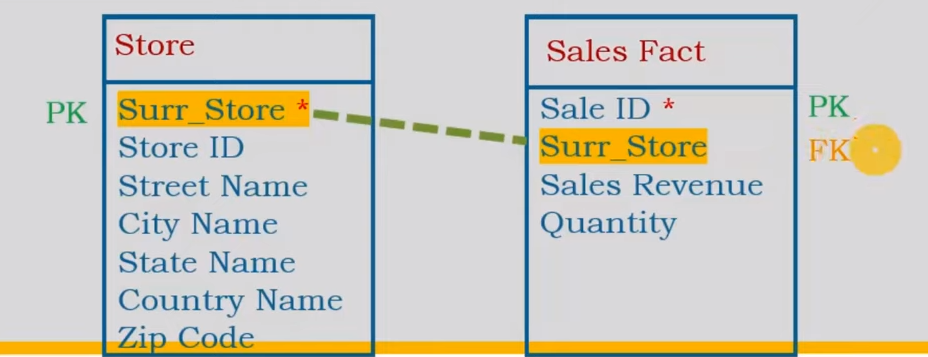

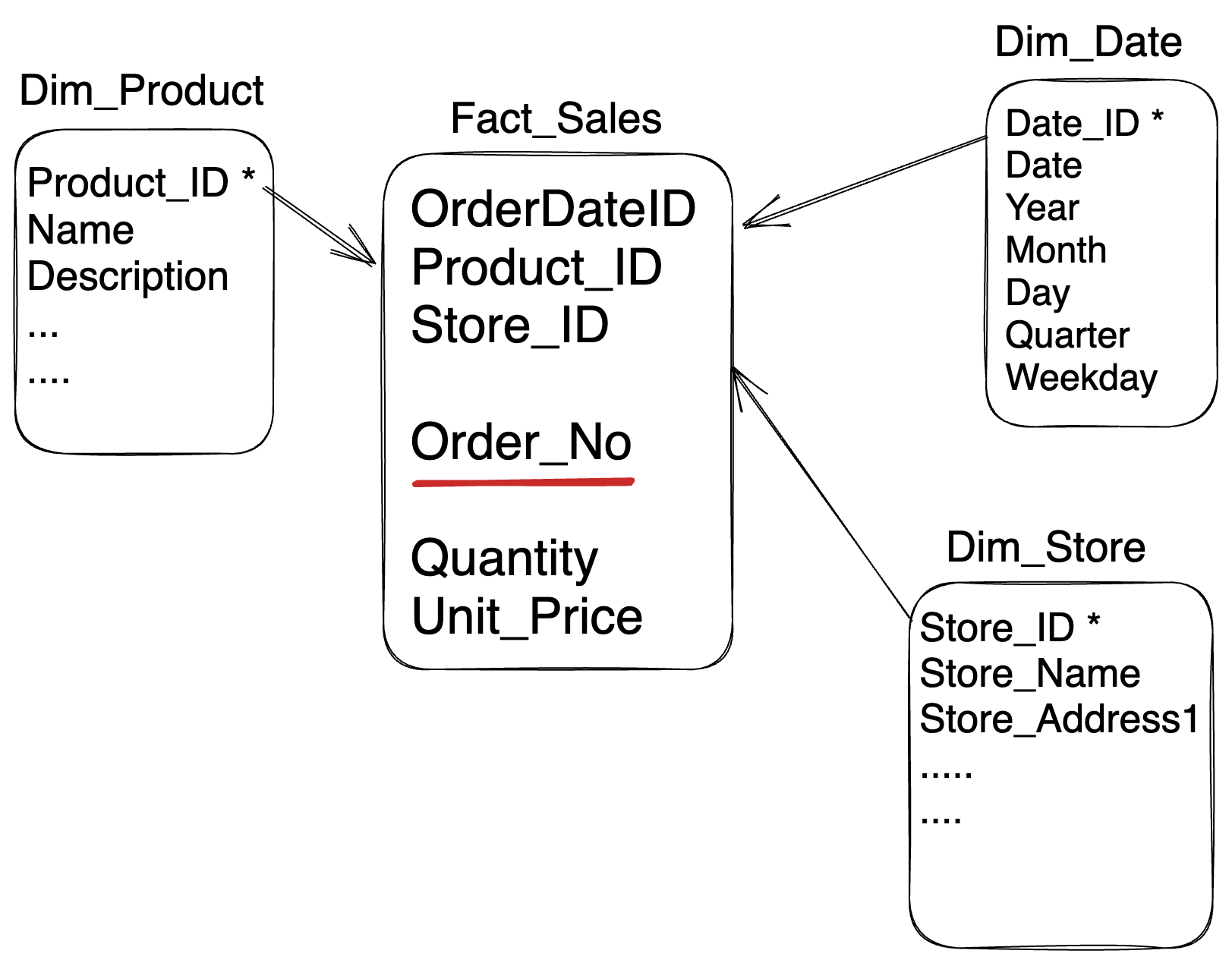

Data Modeling

-

Data Modeling is a process to visualize the data warehouse.

-

It helps to set standards in naming conventions, creating relationships between datasets, and establishing compliance and security.

-

Most Complex phase in data warehouse design.

-

Recollect Top - Down vs. Bottom - Up that decision plays a vital role.

-

Data Modelling typically starts at the data mart level and then branches out to the data warehouse.

-

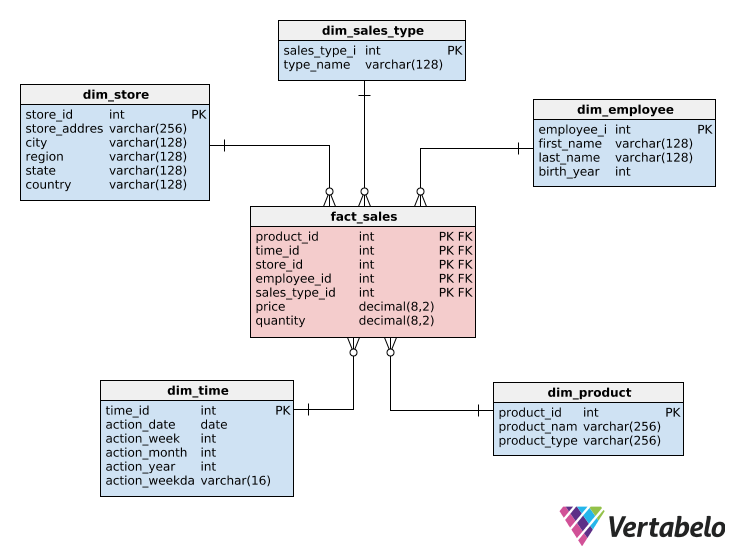

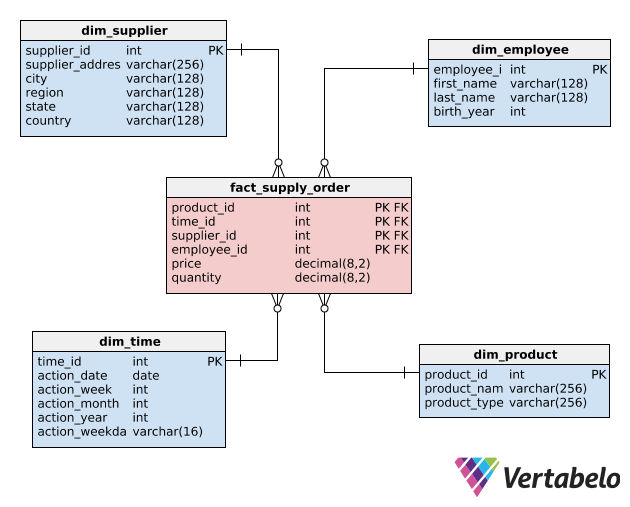

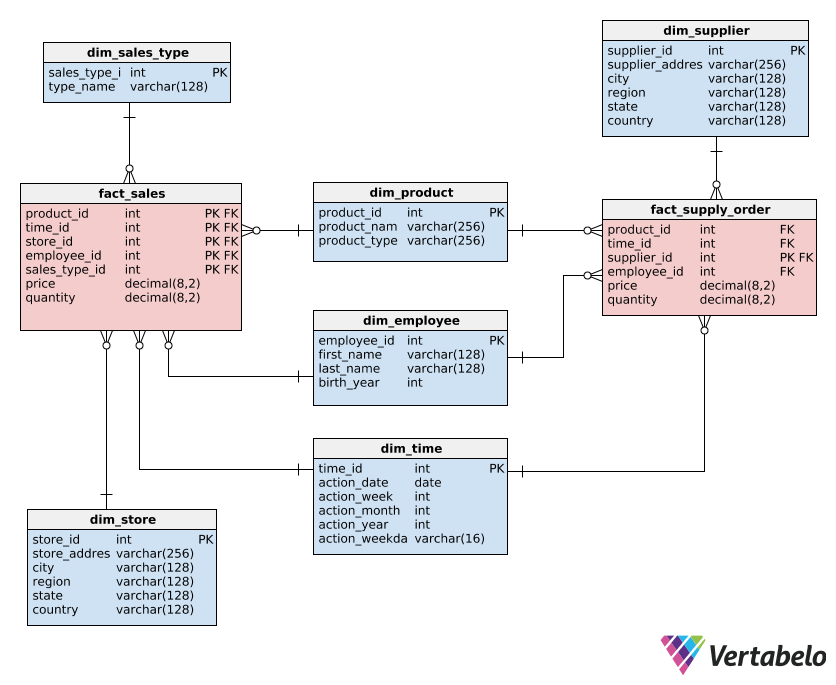

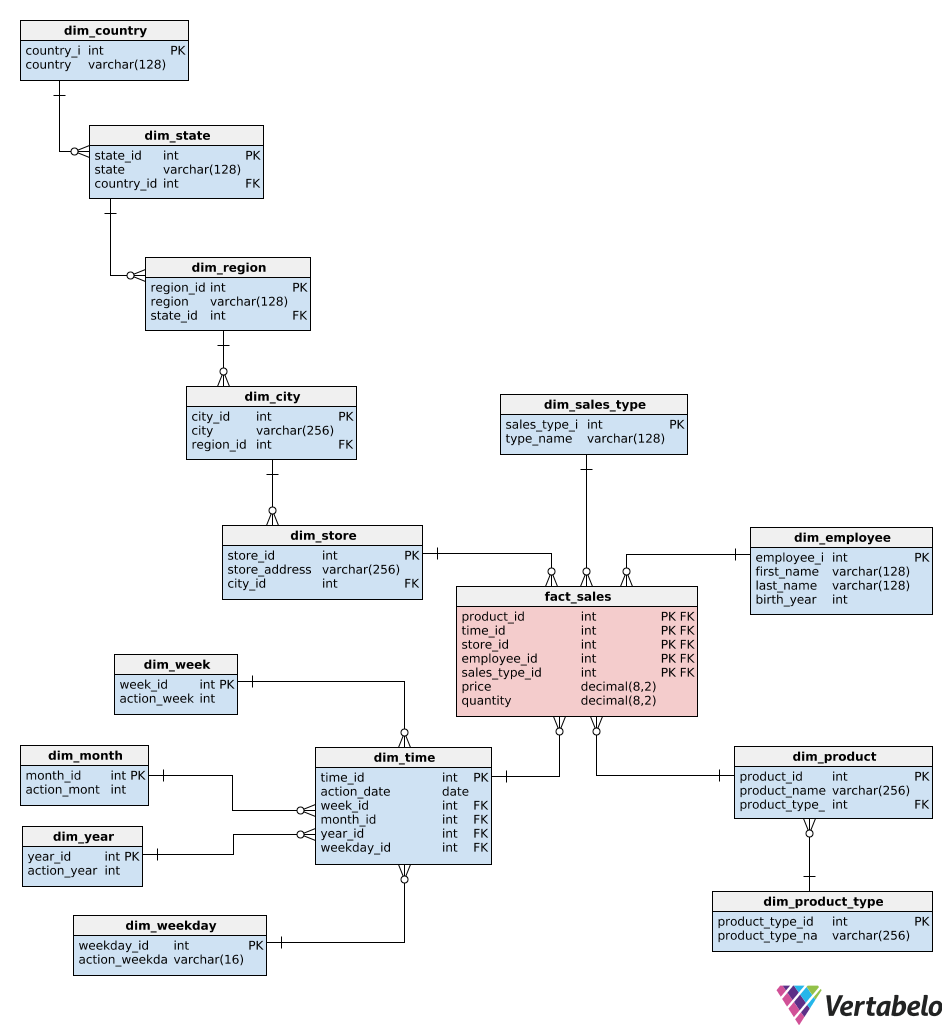

Three popular data models for data warehouses

- Star Schema

- Galaxy Schema

- Snowflake Schema

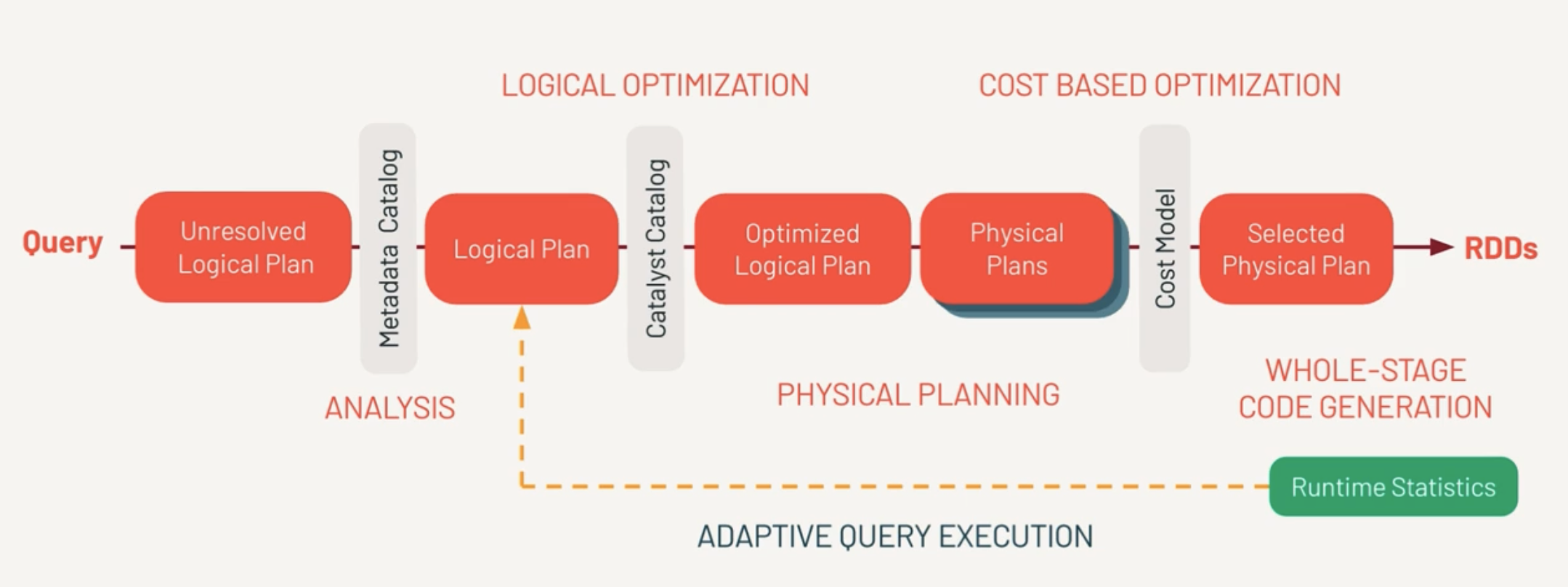

ETL / ELT Solution

ETL

- Extract

- Transform

- Load

ETL plays a vital part in moving data across. Many ways ETL can be implemented.

Popular ones

GUI Tools such as

- SSIS

- Pentaho

- Talend

- Scripting Tools such as Bash, Python.

ELT

- Extract

- Load

- Transform

In big data platforms such as Hadoop, and Spark, you can load a JSON, or CSV file and start using them as is. This technology can even parse compressed .gz / .bz2 files

Extensively used when dealing with Semi-Structured databases.

Register with Databricks community edition

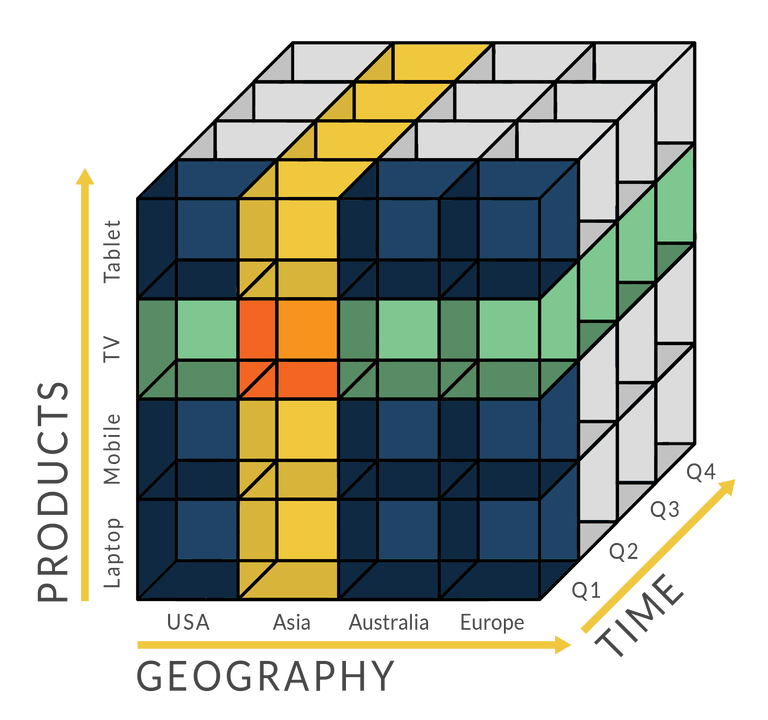

Online Analytic Processing

In OLAP cube data can be pre-calculated and pre-aggregated, making analysis faster.

Usually, data is organized in row and column format.

OLAP contains multi-dimensional data, with data from different data sources.

There are 4 types of analytical operations in OLAP

Roll-up: Consolidation, aggregation. Data from different cities can be rolled up to the state/country level.

Drill-down: Opposite of roll-up. If you have data by year, you can analyze monthly, weekly, and daily trends.

Slice-dice: Take one dimension of the data from the cube and create a sub-cube.

If data from various products / various quarters are available take one quarter alone and work with it.

Pivot: Rotating the data axes. Basically swapping the x and y-axis of the data.

Front End

Visualization - the primary reason for creating data warehouses.

Popular Premium BI tools.

- Tableau

- PowerBI

- Looker

Check Data Warehouse Tools for more details.

Query Optimization

These are some basic best practices. There is lot more to discuss in future sessions.

-

Retrieve only necessary rows.

-

Do not use *; instead, specify the columns.

-

Create views so users will control the data pull as well as security.

-

Filter first and Join later.

-

Filter first and Group later.

-

Monitor queries regularly for performance.

-

Index only when needed. Please don't index unwanted columns.

RDBMS

- Data Model

- Online vs Batch

- DSL vs GPL

- Storage Formats

- File Formats

- DuckDB

- DuckDB Sample - 01

- DuckDB Sample - 02

- DuckDB - Date Dimension

- Practice using SQLBolt

Data Model

Data Models: Is used to define how the logical structure of a database is modeled.

Entity: A Database entity is a thing, person, place, unit, object, or any item about which the data should be captured and stored in properties, workflow, and tables.

Attributes: Properties of Entity.

Example:

Entity: Student

Attributes: id, name, join date

Student

| student id | name | join date |

|---|---|---|

| 101 | Rachel Green | 2000-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Entity Relationship Model

Student

| student id | name | join date |

|---|---|---|

| 101 | Rachel Green | 2000-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Courses

| student id | semester | course |

|---|---|---|

| 101 | Semester 1 | DBMS |

| 101 | Semester 1 | Calculus |

| 201 | Semester 1 | Algebra |

| 201 | Semester 1 | Web |

One to One Mapping

erDiagram

Student {

int student_id PK

string name

date join_date

}

Studentdetails {

int student_id PK

string SSN

date DOB

}

Student ||--|| Studentdetails : "1 to 1"

Student

| student id | name | join date |

|---|---|---|

| 101 | Rachel Green | 2000-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Studentdetails

| student id | SSN | DOB |

|---|---|---|

| 101 | 123-56-7890 | 1980-05-01 |

| 201 | 236-56-4586 | 1979-07-05 |

| 301 | 365-45-9875 | 1980-12-14 |

| 401 | 148-89-4758 | 1978-06-05 |

For every row on the left-hand side, there will be only one matching entry on the right-hand side.

For student id 101, you will find one SSN and one DOB.

One to Many Mapping

erDiagram

Student {

int student_id PK

string name

date join_date

}

Address {

int address_id PK

int student_id FK

string address

string address_type

}

Student ||--o{ Address : "has"

Student

| student id | name | join date |

|---|---|---|

| 101 | Rachel Green | 2000-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Address

| student id | address id | address | address type |

|---|---|---|---|

| 101 | 1 | 1 main st, NY | Home |

| 101 | 2 | 4 john blvd,NJ | Dorm |

| 301 | 3 | 3 main st, NY | Home |

| 301 | 4 | 5 john blvd,NJ | Dorm |

| 201 | 5 | 12 center st, NY | Home |

| 401 | 6 | 11 pint st, NY | Home |

What do you notice here?

Every row on the left-hand side has one or more rows on the right-hand side.

For student id 101, you will notice the home address and Dorm address.

Many to Many Mapping

erDiagram

Student {

int student_id PK

string name

date join_date

}

Course {

string course_id PK

string course_name

}

StudentCourses {

int student_id FK

string course_id FK

}

Student ||--o{ StudentCourses : enrolls

Course ||--o{ StudentCourses : offered_in

Student

| student id | name | join date |

|---|---|---|

| 101 | Rachel Green | 2000-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Student Courses

| student id | course id |

|---|---|

| 101 | c1 |

| 101 | c2 |

| 301 | c1 |

| 301 | c3 |

| 201 | c3 |

| 401 | c4 |

Courses

| course id | course name |

|---|---|

| c1 | DataBase |

| c2 | Web Programming |

| c3 | Big Data |

| c4 | Data Warehouse |

What do you notice here?

Students can take more than one course, and courses can have more than one student.

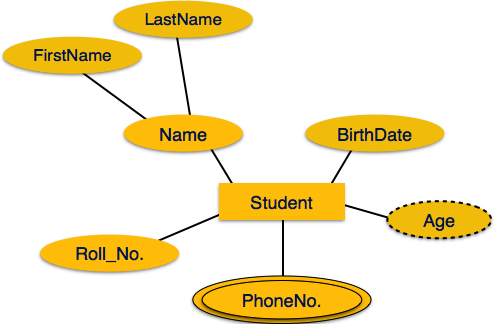

Attributes

Types of Attributes

Simple Attribute - Atomic Values.

An attribute that cannot be divided further.

Example: ssn

Composite Attribute

Made up of more than one simple attribute.

Example: firstname + mi + lastname

Derived Attribute

Calculated from existing attributes.

Age: Derived from DOB

Multivalued Attribute

An attribute that can have multiple values for a single entity (e.g., PhoneNumbers for a person).

src: https://www.tutorialspoint.com/dbms/er_diagram_representation.htm

Student: Entity

Name: Composite Attribute

Student ID: Simple Attribute

Age: Derived Attribute

PhoneNo: MultiValued Attribute

(The Student can have more than one phone number)

Keys

Primary Key

The Attribute helps to identify a row in an entity uniquely.

It cannot be empty and cannot have duplicates.

| student id | name | join date |

|---|---|---|

| 101 | Rachel Green | 2020-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Based on the above data, which attribute can be PK?

any column (as its just four rows)

If we extend the dataset to 10000 rows, which can be PK?

yes student id

Composite Key

A Primary key that consists of two or more attributes is known as a **** composite key.

| student id | course id | name |

|---|---|---|

| 101 | C1 | Rachel Green |

| 101 | C2 | Rachel Green |

| 201 | C2 | Monica Geller |

| 201 | C3 | Cosmo Kramer |

Do you know how to find the unique row?

The combination of StudentID + CourseID makes it unique.

Unique Key

Unique keys are similar to Primary Key, except it can allow one NULL.

Transaction

What is a Transaction?

A transaction can be defined as a group of tasks.

A simple task is the minimum processing unit that cannot be divided further.

Example: Transfer $500 from X account to Y account.

Open_Account(X)

Old_Balance = X.balance

New_Balance = Old_Balance - 500

X.balance = New_Balance

Close_Account(X)

Open_Account(Y)

Old_Balance = B.balance

New_Balance = Old_Balance + 500

Y.balance = New_Balance

Close_Account(Y)

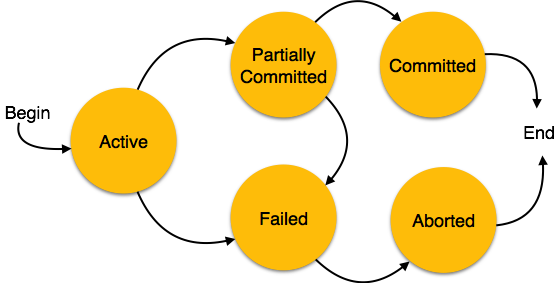

States of Transaction

src: https://www.tutorialspoint.com/dbms/dbms_transaction.htm

src: www.guru99.com

- Active: When the transaction's instructions are running, the transaction is active.

- Partially Committed: After completing all the read and write operations, the changes are made in the main memory or local buffer.

- Committed: It is the state in which the changes are made permanent on the DataBase.

- Failed: When any instruction of the transaction fails.

- Aborted: The changes are only made to the local buffer or main memory; hence, these changes are deleted.

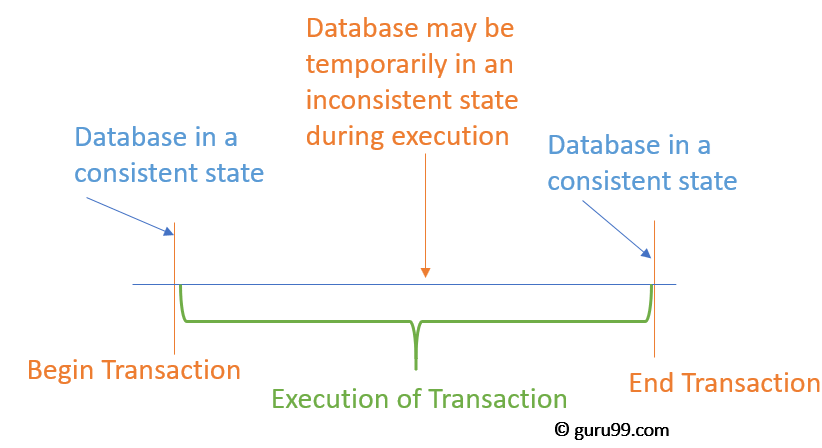

ACID

ACID Complaint

Atomicity: All or none. Changes all or none.

Consistency: Never leaves the database in a half-finished state. Deleting a customer is not possible before deleting related invoices.

Isolation: Separates a transaction from another. Transactions are isolated; they occur independently without interference. One change will not be visible to another transaction.

Durability: Recover from an abnormal termination. Once the transaction is completed, data is written to disk and persists when the system fails. DB returns to a consistent state when restarted due to abnormal termination.

Example

Atomicity

Example: Transferring money between two bank accounts. If you transfer $100 from Account A to Account B, the transaction must ensure that either the debit from Account A and credit to Account B occur or neither occurs. If any error happens during the transaction (e.g., system crash), the transaction will be rolled back, ensuring that no partial transaction is completed.

Consistency

Example: Enforcing database constraints, such as ensuring that a field that stores a percentage can only hold values between 0 and 100. If an operation tries to insert a value of 110, it will fail because it violates the consistency rule of the database schema.

Isolation:

Example: Two transactions that concurrently update the same set of rows in a database. Transaction A updates a row; before it commits, Transaction B also tries to update the same row. Depending on the isolation level (e.g., Serializable), Transaction B may be required to wait until Transaction A commits or rolls back to avoid data inconsistencies, ensuring that transactions do not interfere with each other.

Durability:

Example: After a transaction is committed, such as a user adding an item to a shopping cart in an e-commerce application, the data must be permanently saved to the database. Even if the server crashes immediately after the commit, the added item must remain in the shopping cart once the system is back online.

Online/Realtime vs Batch

Online Processing

An online system handles transactions when they occur and provides output directly to users. It is interactive.

Use Cases

E-commerce Websites:

Use Case: Processing customer orders. Example: When a customer places an order, the system immediately updates inventory, processes the payment, and provides an order confirmation in real time. Any delay could lead to issues like overselling stock or customer dissatisfaction.

Online Banking:

Use Case: Fund transfers and account balance updates. Example: When a user transfers money between accounts, the transaction is processed immediately, and the balance is updated in real-time. Real-time processing is crucial to ensure the funds are available immediately and that the account balance reflects the latest transactions.

Social Media Platforms:

Use Case: Posting updates and notifications. Example: When a user posts a new status or comment, it should be instantly visible to their followers. Notifications about likes, comments, or messages are also delivered in real time to maintain user engagement.

Ride-Sharing Services (e.g., Uber, Lyft):

Use Case: Matching drivers with passengers. Example: When a user requests a ride, the system matches them with a nearby driver in real time, providing immediate feedback on the driver's location and estimated arrival time.

Fraud Detection Systems:

Use Case: Monitoring transactions for fraudulent activities. Example: Credit card transactions are monitored in real time to detect unusual patterns and prevent fraud before they are completed.

Batch Processing

Data is processed in groups or batches. Batch processing is typically used for large amounts of data that must be processed on a routine schedule, such as paychecks or credit card transactions.

A batch processing system has several main characteristics: collect, group, and process transactions periodically.

Batch programs require no user involvement and require significantly fewer network resources than online systems.

Use Cases

End-of-Day Financial Processing:

Use Case: Reconciling daily transactions. Example: Banks often batch process all the day's transactions at the end of the business day to reconcile accounts, generate statements, and update records. This processing doesn't need to be real-time but must be accurate and comprehensive.

Data Warehousing and ETL Processes:

Use Case: Extracting, transforming, and loading data into a data warehouse. Example: A retail company may extract sales data from various stores, transform it to match the warehouse schema, and load it into a centralized data warehouse. This process is typically done in batches overnight to prepare the data for reporting and analysis the next day.

Payroll Processing:

Use Case: Calculating employee salaries. Example: Payroll systems typically calculate and process salaries in batches, often once per pay period. Employee data (hours worked, overtime, deductions) is collected over the period and processed in a single batch job.

Inventory Updates:

Use Case: Updating inventory levels across multiple locations. Example: A chain of retail stores might batch-process inventory updates at the end of each day. Each store's sales data is sent to a central system, where inventory levels are adjusted in batches to reflect the day's sales.

Billing Systems:

Use Case: Generating customer bills. Example: Utility companies often generate customer bills at the end of each month. Usage data is collected throughout the month, and bills are generated in batch processing jobs, which are then sent to customers.

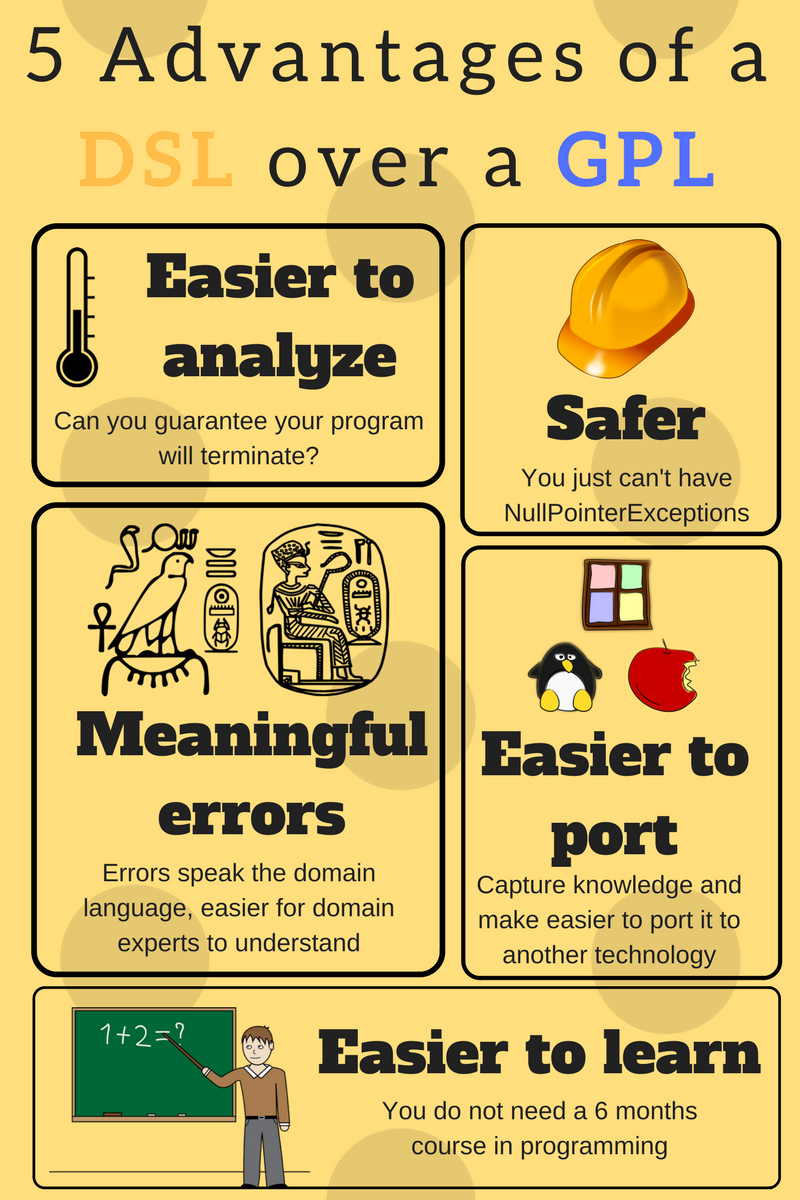

DSL vs GPL

GPL - General Programming Language. Python / JAVA / C++

One tool can be used to do many things.

DSL - Domain-Specific Language. HTML / SQL / JQ / AWK /...

Specific tools to do a specific job.

src: https://tomassetti.me/domain-specific-languages/"

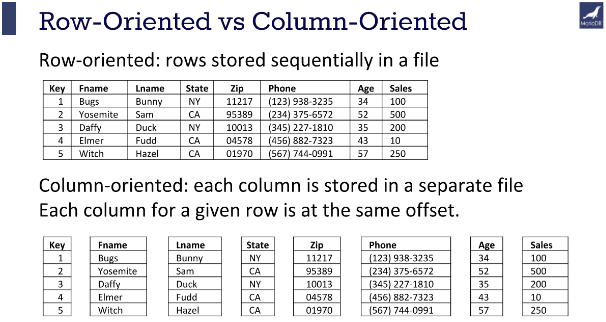

Storage Formats

| Account number | Last name | First name | Purchase (in dollars) |

|---|---|---|---|

| 1001 | Green | Rachel | 20.12 |

| 1002 | Geller | Ross | 12.25 |

| 1003 | Bing | Chandler | 45.25 |

Row Oriented Storage

In a row-oriented DBMS, the data would be stored as

1001,Green,Rachel,20.12;1002,Geller,Ross,12.25;1003,Bing,Chandler,45.25

Best suited for OLTP - Transaction data.

Columnar Oriented Storage

1001,1002,1003;Green,Geller,Bing;Rachel,Ross,Chandler;20.12,12.25,45.25

Best suited for OLAP - Analytical data.

-

Compression: Since the data in a column tends to be of the same type (e.g., all integers, all strings), and often similar values, it can be compressed much more effectively than row-based data.

-

Query Performance: Queries that only access a subset of columns can read just the data they need, reducing disk I/O and significantly speeding up query execution.

-

Analytic Processing: Columnar storage is well-suited for analytical queries and data warehousing, which often involve complex calculations over large amounts of data. Since these queries often only affect a subset of the columns in a table, columnar storage can lead to significant performance improvements.

src: https://mariadb.com/resources/blog/why-is-columnstore-important/

File Formats

CSV/TSV

Pros

- Tabular Row storage.

- Human-readable is easy to edit manually.

- Simple schema.

- Easy to implement and parse the file(s).

Cons

- No standard way to present binary data.

- No complex data types.

- Large in size.

JSON

Pros

- Supports hierarchical structure.

- Most languages support them.

- Widely used in Web

Cons

- More memory usage due to repeatable column names.

- Not very splittable.

- Lacks indexing.

Parquet

Parquet is a columnar storage file format optimized for use with Apache Hadoop and related big data processing frameworks. Twitter and Cloudera developed it to provide a compact and efficient way of storing large, flat datasets.

Best for WORM (Write Once Read Many).

The key features of Parquet are:

- Columnar Storage: Parquet is optimized for columnar storage, unlike row-based files like CSV or TSV. This allows it to efficiently compress and encode data, which makes it a good fit for storing data frames.

- Schema Evolution: Parquet supports complex nested data structures, and the schema can be modified over time. This provides much flexibility when dealing with data that may evolve.

- Compression and Encoding: Parquet allows for highly efficient compression and encoding schemes. This is because columnar storage makes better compression and encoding schemes possible, which can lead to significant storage savings.

- Language Agnostic: Parquet is built from the ground up for use in many languages. Official libraries are available for reading and writing Parquet files in many languages, including Java, C++, Python, and more.

- Integration: Parquet is designed to integrate well with various big data frameworks. It has deep support in Apache Hadoop, Apache Spark, and Apache Hive and works well with other data processing frameworks.

In short, Parquet is a powerful tool in the big data ecosystem due to its efficiency, flexibility, and compatibility with a wide range of tools and languages.

Difference between CSV and Parquet

| Aspect | CSV (Comma-Separated Values) | Parquet |

|---|---|---|

| Data Format | Text-based, plain text | Columnar, binary format |

| Compression | Usually uncompressed (or lightly compressed) | Highly compressed |

| Schema | None, schema-less | Strong schema enforcement |

| Read/Write Efficiency | Row-based, less efficient for column operations | Column-based, efficient for analytics |

| File Size | Generally larger | Typically smaller due to compression |

| Storage | More storage space required | Less storage space required |

| Data Access | Good for sequential access | Efficient for accessing specific columns |

| Example Size (1 GB) | Could be around 1 GB or more depending on compression | Could be 200-300 MB (due to compression) |

| Use Cases | Simple data exchange, compatibility | Big data analytics, data warehousing |

| Support for Data Types | Limited to text, numbers | Rich data types (int, float, string, etc.) |

| Processing Speed | Slower for large datasets, particularly for queries on specific columns | Faster, especially for column-based queries |

| Tool Compatibility | Supported by most tools, databases, and programming languages | Supported by big data tools like Apache Spark, Hadoop, etc. |

Parquet Compression

- Snappy (default)

- Gzip

Snappy

- Low CPU Util

- Low Compression Rate

- Splittable

- Use Case: Hot Layer

- Compute Intensive

GZip

- High CPU Util

- High Compression Rate

- Splittable

- Use Case: Cold Layer

- Storage Intensive

DuckDB

DuckDB is an in-process SQL database management system designed for efficient analytical query processing.

Its a single file DB, no need of Client / Server setup.

Its like SQLLite on Steroids.

Install Duck DB

https://duckdb.org/#quickinstall

Key Features

In-Process Execution: DuckDB operates entirely within your application process, eliminating the need for a client-server setup. This makes it highly efficient for embedded analytics.

Columnar Storage: DuckDB uses a columnar storage format, unlike traditional row-based databases. This allows it to handle analytical queries more efficiently, as operations can simultaneously be performed on entire columns.

SQL Compatibility: DuckDB's wide range of SQL features makes it a breeze to use for anyone familiar with SQL. It can handle complex queries, joins, and aggregations without requiring specialized query syntax, ensuring a comfortable and familiar experience.

Integration and Ease of Use: DuckDB is designed to integrate easily into various environments. It supports multiple programming languages, including Python, R, and C++, and can be used directly within data science workflows.

Scalability: DuckDB, while optimized for in-process execution, is more than capable of scaling to handle large datasets. This reassures you that it's a reliable choice for lightweight analytics and exploratory data analysis (EDA).

Extensibility: The database supports user-defined functions (UDFs) and extensions, allowing you to extend its capabilities to fit your needs.

Limitations

- Limited Transaction Support

- Concurrency Limitations

- No Client-Server Model

- Lack of Advanced RDBMS Features

- Not Designed for Massive Data Warehousing

Duckdb Sample - 01

Launch Duckdb

duckdb

.open newdbname.duckdb

CREATE TABLE employees (

id INTEGER PRIMARY KEY,

name VARCHAR NOT NULL,

email VARCHAR UNIQUE,

department VARCHAR,

salary DECIMAL(10, 2) CHECK (salary > 0)

);

INSERT INTO employees (id,name, email, department, salary) VALUES

(1,'Rachel Green', 'rachel.green@friends.com', 'Fashion', 55000.00);

INSERT INTO employees (id,name, email, department, salary) VALUES

(2,'Monica Geller', 'monica.geller@friends.com', 'Culinary', 62000.50);

INSERT INTO employees (id,name, email, department, salary) VALUES

(3,'Phoebe Buffay', 'phoebe.buffay@friends.com', 'Massage Therapy', 48000.00);

INSERT INTO employees (id,name, email, department, salary) VALUES

(4,'Joey Tribbiani', 'joey.tribbiani@friends.com', 'Acting', 40000.75);

INSERT INTO employees (id,name, email, department, salary) VALUES

(5,'Chandler Bing', 'chandler.bing@friends.com', 'Data Analysis', 70000.25);

INSERT INTO employees (id,name, email, department, salary) VALUES

(6,'Ross Geller', 'ross.geller@friends.com', 'Paleontology', 65000.00);

select * from employees

- .databases or show databases;

- .tables or show tables;

- .mode list

- .mode table

- .mode csv

- summarize employees;

- summarize select name,email from employees;

- .exit

CREATE SEQUENCE dept_id_seq;

drop table if exists departments;

CREATE TABLE departments (

id INTEGER PRIMARY KEY DEFAULT nextval('dept_id_seq'),

dept_name VARCHAR NOT NULL

);

insert into departments(dept_name) values('Fashion');

insert into departments(dept_name) values('Culinary');

insert into departments(dept_name) values('Massage');

insert into departments(dept_name) values('Acting');

insert into departments(dept_name) values('Data Analysis');

insert into departments(dept_name) values('Paleontology');

CREATE SEQUENCE emp_id_seq;

DROP TABLE if exists employees;

CREATE TABLE if not exists employees (

id INTEGER PRIMARY KEY DEFAULT nextval('emp_id_seq'),

name VARCHAR NOT NULL,

email VARCHAR UNIQUE,

department VARCHAR,

salary DECIMAL(10, 2) CHECK (salary > 0),

hire_date DATE DEFAULT CURRENT_DATE,

);

INSERT INTO employees (name, email, department, salary) VALUES

('Rachel Green', 'rachel.green@friends.com', 'Fashion', 55000.00);

INSERT INTO employees (name, email, department, salary) VALUES

('Monica Geller', 'monica.geller@friends.com', 'Culinary', 62000.50);

INSERT INTO employees (name, email, department, salary) VALUES

('Phoebe Buffay', 'phoebe.buffay@friends.com', 'Massage Therapy', 48000.00);

INSERT INTO employees (name, email, department, salary) VALUES

('Joey Tribbiani', 'joey.tribbiani@friends.com', 'Acting', 40000.75);

INSERT INTO employees (name, email, department, salary) VALUES

('Chandler Bing', 'chandler.bing@friends.com', 'Data Analysis', 70000.25);

INSERT INTO employees (name, email, department, salary) VALUES

('Ross Geller', 'ross.geller@friends.com', 'Paleontology', 65000.00);

INSERT INTO employees (id,name, email, department, salary) VALUES

(8,'Ben Geller', 'ben.geller@friends.com', 'Student', 1.00);

INSERT INTO employees (name, email, department, salary) VALUES

('Emma Green', 'emma.green@friends.com', 'Kid', 1.00);

CREATE SEQUENCE emp_dept_id_seq;

CREATE TABLE emp_dept (

id INTEGER PRIMARY KEY DEFAULT nextval('emp_dept_id_seq'),

dept_id INTEGER NOT NULL,

emp_id INTEGER NOT NULL,

FOREIGN KEY (dept_id) REFERENCES departments(id),

FOREIGN KEY (emp_id) REFERENCES employees(id)

);

INSERT INTO emp_dept(emp_id,dept_id) VALUES (1,1),(2,2),(3,3),(4,4),(5,5),(6,6);

INSERT INTO emp_dept(emp_id,dept_id) VALUES (9,7);

CREATE SEQUENCE product_id_seq;

CREATE TABLE products (

id INTEGER PRIMARY KEY DEFAULT nextval('product_id_seq'),

name VARCHAR NOT NULL,

price DECIMAL(10, 2),

discounted_price DECIMAL(10, 2) GENERATED ALWAYS AS (price * 0.9) VIRTUAL

);

CREATE SEQUENCE orders_id_seq;

CREATE TABLE orders (

id INTEGER PRIMARY KEY DEFAULT nextval('orders_id_seq'),

customer_name VARCHAR,

items INTEGER[],

shipping_address STRUCT(

street VARCHAR,

city VARCHAR,

zip VARCHAR

)

);

INSERT INTO orders (customer_name, items, shipping_address) VALUES

('John Doe', [1, 2, 3], {'street': '123 Elm St', 'city': 'Springfield', 'zip': '11111'}),

('Jane Smith', [3, 4, 5], {'street': '456 Oak St', 'city': 'Greenville', 'zip': '22222'}),

('Emily Johnson', [6, 7, 8, 9], {'street': '789 Pine St', 'city': 'Fairview', 'zip': '33333'});

Query Orders

select * from orders;

select id,customer_name,items,shipping_address.city from orders;

select id,customer_name,items,shipping_address['city'] from orders;

select id,customer_name,shipping_address.* from orders;

-- Array

select id,customer_name,len(items) from orders;

select id,customer_name,list_contains(items,3) from orders;

select id,customer_name,list_distinct(items) from orders;

select id,customer_name,unnest(items),shipping_address.* from orders;

More Functions

https://duckdb.org/docs/sql/functions/list

select current_catalog(), current_schema();

-- Returns the name of the currently active catalog. Default is memory. -- Returns the name of the currently active schema. Default is main.

Query Github Directly

select * from read_parquet('https://github.com/duckdb/duckdb-data/releases/download/v1.0/userdata1.parquet');

select * from read_parquet('https://github.com/duckdb/duckdb-data/releases/download/v1.0/orders.parquet') limit 5;

select * from read_parquet('https://github.com/duckdb/duckdb-data/releases/download/v1.0/city_temperature.parquet') limit 5;

Create DuckDB table

create table orders as select * from read_parquet('https://github.com/duckdb/duckdb-data/releases/download/v1.0/orders.parquet');

describe table

describe orders;

select * from read_json('https://github.com/duckdb/duckdb-data/releases/download/v1.0/canada.json') limit 5;

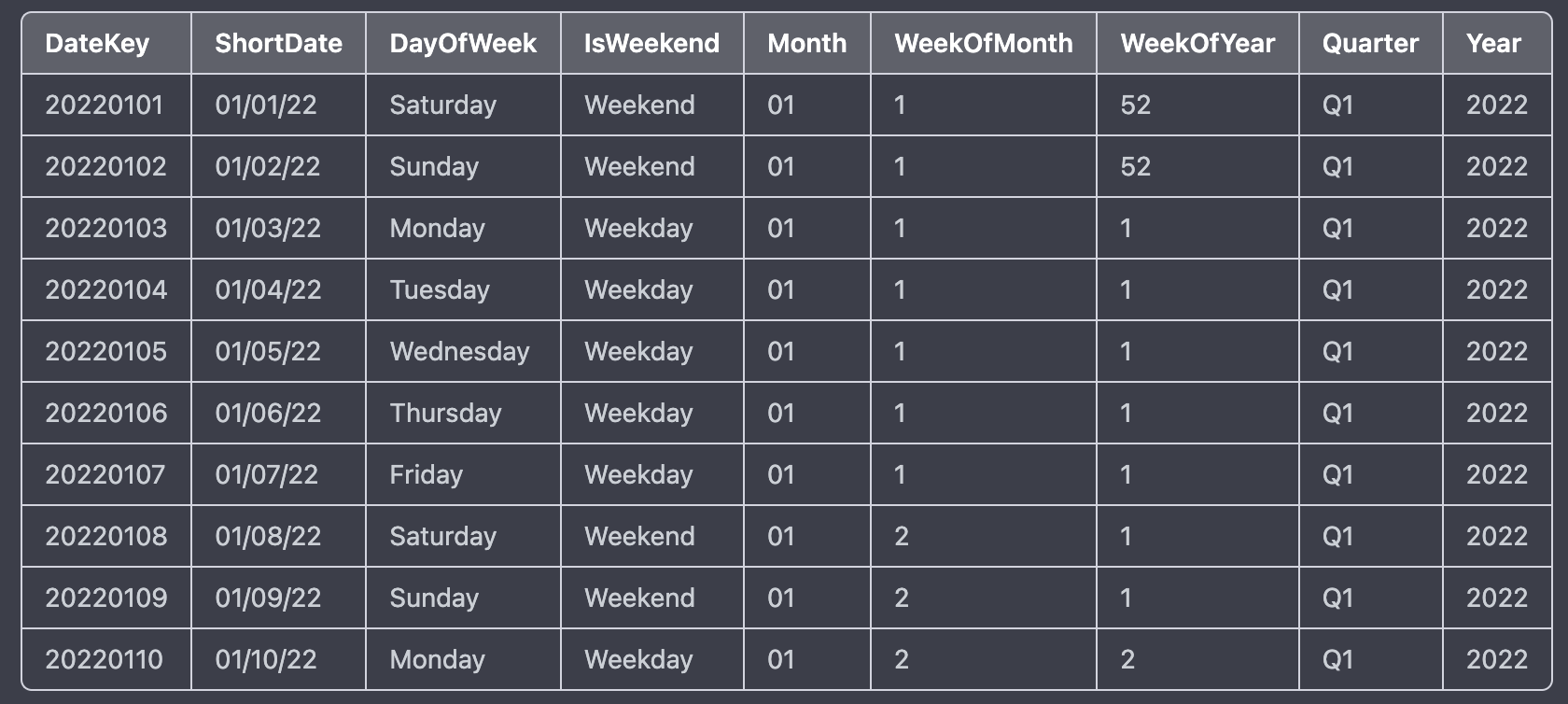

DuckDB Arrays, CTE & DATE Dimension

select generate_series(1,100,12) as num;

select generate_series(DATE '2024-09-01', DATE '2024-09-10', INTERVAL 1 DAY) as dt;

with date_cte as(

select strftime(unnest(generate_series(DATE '2024-09-01', DATE '2024-09-10', INTERVAL 1 DAY)),'%Y-%m-%d') as dt

)

SELECT dt FROM date_cte;

WITH date_cte AS (

SELECT unnest(generate_series(DATE '2024-09-01', DATE '2024-09-10', INTERVAL 1 DAY)) AS dt

)

SELECT

REPLACE(CAST(CAST(dt AS DATE) AS VARCHAR), '-', '') AS DateKey

,CAST(dt AS DATE) AS ShortDate

,DAYNAME(dt) AS DayOfWeek

,CASE

WHEN EXTRACT(DOW FROM dt) IN (0, 6) THEN 'Weekend'

ELSE 'Weekday'

END AS IsWeekend

,CAST(CEIL(EXTRACT(DAY FROM dt) / 7.0) AS INTEGER) AS WeekOfMonth

,EXTRACT(WEEK FROM dt) AS WeekOfYear

,'Q' || CAST(EXTRACT(QUARTER FROM dt) AS VARCHAR) AS Quarter

,CASE

WHEN strftime(dt, '%m') IN ('01', '02', '03') THEN 'Q1'

WHEN strftime(dt, '%m') IN ('04', '05', '06') THEN 'Q2'

WHEN strftime(dt, '%m') IN ('07', '08', '09') THEN 'Q3'

ELSE 'Q4'

END AS Quarter_alternate

,EXTRACT(YEAR FROM dt) AS Year

,EXTRACT(MONTH FROM dt) AS Month

,EXTRACT(Day FROM dt) AS Day

FROM date_cte

ORDER BY dt;

Practice using SQLBolt

https://sqlbolt.com/lesson/select_queries_introduction

The answer Key (if needed)

Cloud

Overview

Definitions

Hardware: physical computer / equipment / devices

Software: programs such as operating systems, word, Excel

Web Site: Readonly web pages such as company pages, portfolios, newspapers

Web Application: Read Write - Online forms, Google Docs, email, Google apps

Cloud Plays a significant role in the Big Data world.

In today's market, Cloud helps companies to accommodate the ever-increasing volume, variety, and velocity of data.

Cloud Computing is a demand delivery of IT resources over the Internet through Pay Per Use.

Src : https://thinkingispower.com/the-blind-men-and-the-elephant-is-perception-reality/

Without Cloud knowledge, knowing Bigdata will be something like the above picture.

- Volume: Size of the data.

- Velocity: Speed at which new data is generated.

- Variety: Different types of data.

- Veracity: Trustworthiness of the data.

- Value: Usefulness of the data.

- Vulnerability: Security and privacy aspects.

When people focus on only one aspect without the help of cloud technologies, they miss out on the comprehensive picture. Cloud solutions offer ways to manage all these dimensions in an integrated manner, thus providing a fuller understanding and utilization of Big Data.

Advantages of Cloud Computing for Big Data

- Cost Savings

- Security

- Flexibility

- Mobility

- Insight

- Increased Collaboration

- Quality Control

- Disaster Recovery

- Loss Prevention

- Automatic Software Updates

- Competitive Edge

- Sustainability

Types of Cloud Computing

Public Cloud

Owned and operated by third-party providers. (AWS, Azure, GCP, Heroku, and a few more)

Private Cloud

Cloud computing resources are used exclusively by a single business or organization.

Hybrid

Public + Private: By allowing data and applications to move between private and public clouds, a hybrid cloud gives your business greater flexibility and more deployment options, and helps optimize your existing infrastructure, security, and compliance.

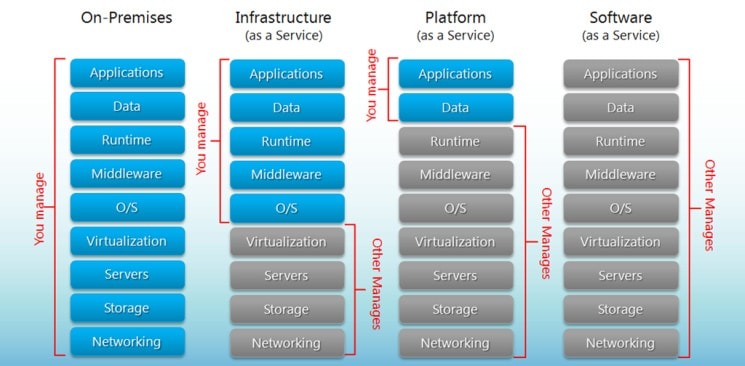

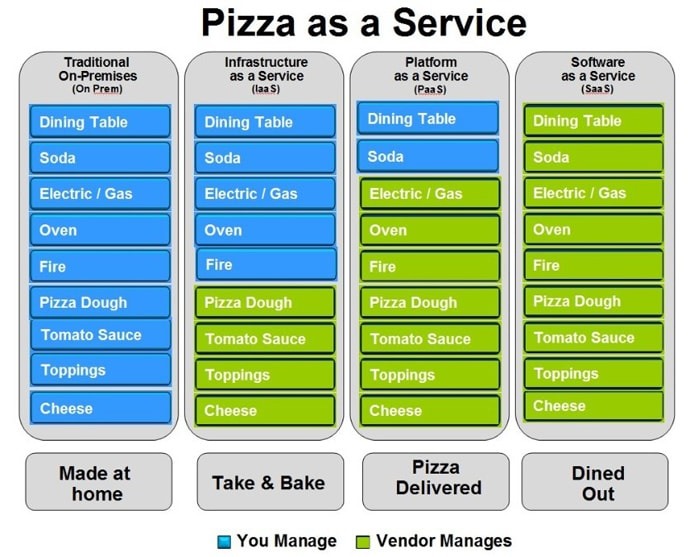

Types of Cloud Services

SaaS

Software as a Service

Cloud-based service providers offer end-user applications. Google Apps, DropBox, Slack, etc.

- Web access to Software (primarily commercial).

- Software is managed from a central location.

- Delivery 1 - many models.

- No patches, No upgrades

When not to use

- Hardware integration is needed. (Price Scanner)

- Faster processing is required.

- Cannot host data outside the premise.

PaaS

Platform as a Service

Software tools are available over the internet. AWS RDS, Heroku, Salesforce

- Scalable

- Built on Virtualization Technology

- No User needed to maintain software. (DB upgrades, patches by cloud team)

When not to use PaaS

- Propriety tools don't allow moving to diff providers. (AWS-specific tools)

- Using new software that is not part of the PaaS toolset.

IaaS

Infrastructure as a Service

Cloud-based hardware services. Pay-as-you-go services for Storage, Networking, and Servers.

Amazon EC2, Google Compute Engine, S3.

- Highly flexible and scalable.

- Accessible by more than one user.

- Cost-effective (if used right).

Serverless computing

Focuses on building apps without spending time managing servers/infrastructure.

Feature automatic scaling, built-in high availability, and pay-per-use.

Use of resources when a specific function or event occurs.

Cloud providers handle the deployment, and capacity, and manage the servers.

Example: AWS Lambda, AWS Step Functions.

Easy way to remember SaaS, PaaS, IaaS

bigcommerce.com

Challenges of Cloud Computing

Privacy: "Both traditional and Big Data sets often contain sensitive information, such as addresses, credit card details, or social security numbers."

So, it's the responsibility of users to ensure proper security methods are followed.

Compliance: Cloud providers replicate data across regions to ensure safety. If companies have regulations that data should not be stored outside their organization or should not be stored in a specific part of the world.

Data Availability: Everything is dependent on the Internet and speed. It is also dependent on the choice of the cloud provider. Big companies like AWS / GCP / Azure have more data centers and backup facilities.

Connectivity: Internet availability + speed.

Vendor lock-in: Once an organization has migrated its data and applications to the cloud, switching to a different provider can be difficult and expensive. This is known as vendor lock-in. Some cloud agnostic tools like Databricks help enterprises to mitigate this problem, but still, its a challenge.

Cost: Cloud computing can be a cost-effective way to deploy and manage IT resources. However, it is essential to carefully consider your needs and budget before choosing a cloud provider.

Continuous Training: Employees may need to be trained to use cloud-based applications. This can be a cost and time investment.

Constant Change in Technology: Cloud providers constantly improve or change their technology. Recently, Microsoft decided to decommission Synapse and launch a new tool called Fabric.

AWS

Terms to Know

Elasticity The ability to acquire resources as you need them and release resources when you no longer need them.

Scale Up vs. Scale Down

Scale-Out vs. Scale In

Latency

Typically latency is a measurement of a round-trip between two systems, such as how long it takes data to make its way between two.

Root User

Owner of the AWS account.

IAM

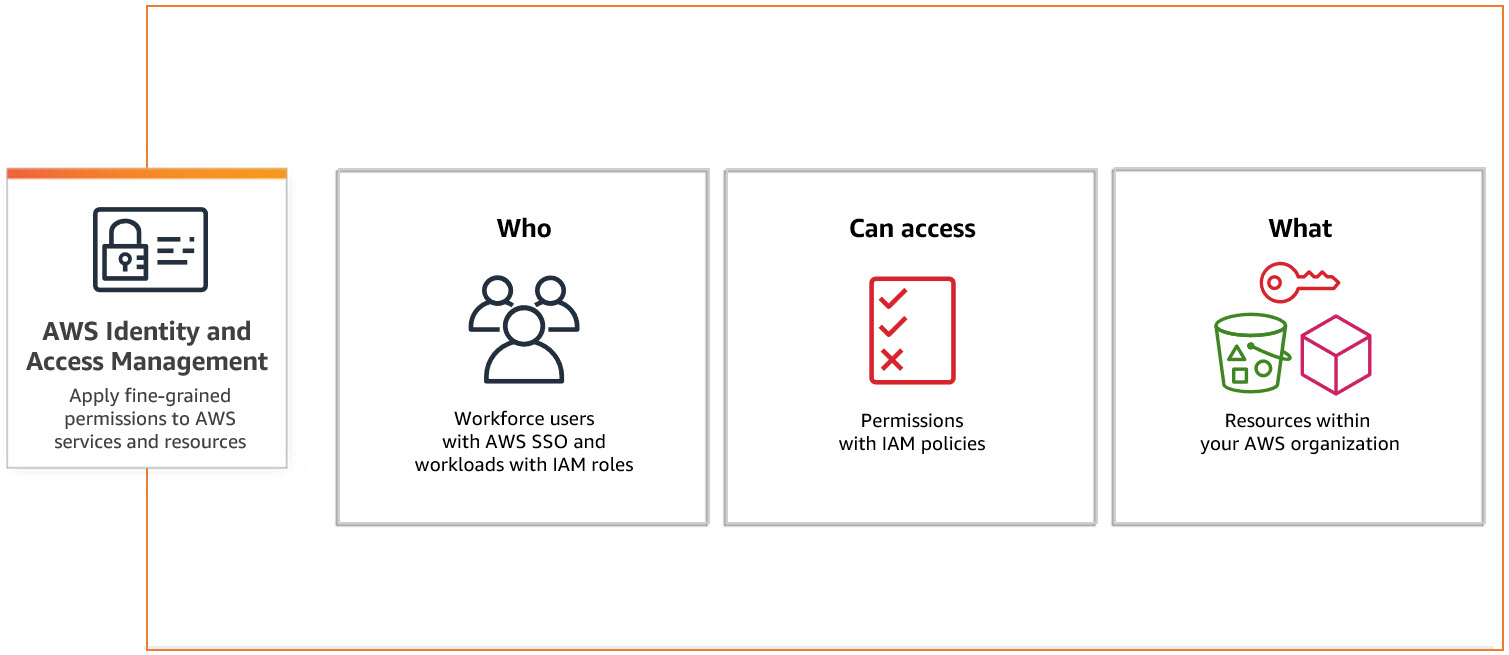

Identity Access Management

ARN

Amazon Resource Name

For example

arn:aws:iam::123456789012:user/Development/product_1234/*

Policy

Rules

AWS Popular Services

Amazon EC2

Allows you to deploy virtual servers within your AWS environment.

Amazon VPC

An isolated segment of the AWS cloud accessible by your own AWS account.

Amazon S3

A fully managed, object-based storage service that is highly available, highly durable, cost-effective, and widely accessible.

AWS IAM (Identify and Access Mgt)

Used to manage permissions to your AWS resources

AWS Management Services

Amazon EC2 Auto Scaling

Automatically increases or decreases your EC2 resources to meet the demand based on custom-defined metrics and thresholds.

Amazon CloudWatch

A comprehensive monitoring tool allows you to monitor your services and applications in the cloud.

Elastic Load Balancing

Used to manage and control the flow of inbound requests to a group of targets by distributing these requests evenly across a targeted resource group.

Billing & Budgeting

Helps control the cost.

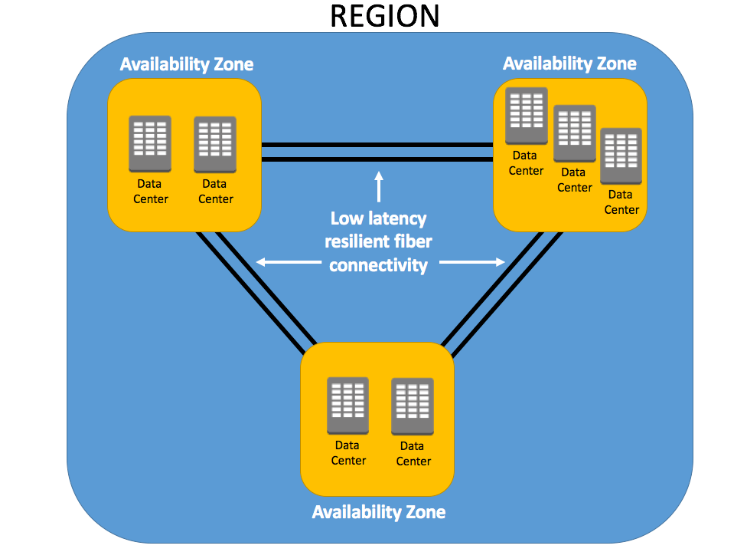

AWS Global Infrastructure

The Primary two items are given below.

- Availability Zones

- Regions

Availability Zones (AZs)

AZs are the physical data centers of AWS.

This is where the actual computing, storage, network, and database resources are hosted that we as consumers, provision within our Virtual Private Clouds (VPCs).

A common misconception is that a single availability zone equals a single data center. Multiple data centers located closely form a single availability zone.

Each AZ will have another AZ in the same geographical area. Each AZ will be isolated from others using a separate power/network like DR.

Many AWS services use low latency links between AZs to replicate data for high availability and resilience purposes.

Multiple AZs are defined as an AWS Regions. (Example: Virginia)

Regions

Every Region will act independently of the others, containing at least two Availability Zones.

Interestingly, only some AWS services are available in some regions.

- US East (N. Virginia) us-east-1

- US East (Ohio) us-east-2

- EU (Ireland) eu-west-1

- EU (Frankfurt) eu-central-1

Note: As of today, AWS is available in 27 regions and 87 AZs

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Concepts.RegionsAndAvailabilityZones.html"

EC2

(Elastic Cloud Compute)

Compute: Closely related to CPU/RAM

Elastic Compute Cloud (EC2): AWS EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers as per your needs.

Instance Types: EC2 offers various instance types optimized for different use cases, such as general purpose, compute-optimized, memory-optimized, and GPU instances.

Pricing Models

On-Demand: Pay for computing capacity by the hour or second.

Reserved: Commit to a one or 3-year term and get a discount.

Spot: Bid for unused EC2 capacity at a reduced cost.

Savings Plans: Commit to consistent compute usage for lower prices. AMI (Amazon Machine Image): Pre-configured templates for your EC2 instances, including the operating system, application server, and applications.

Security

Security Groups: Act as a virtual firewall for your instances to control inbound and outbound traffic.

Key Pairs: These are used to access your EC2 instances via SSH or RDP securely.

Elastic IPs: These are static IP addresses that can be associated with EC2 instances. They are useful for hosting services that require a consistent IP.

Auto Scaling: Automatically adjusts the number of EC2 instances in response to changing demand, ensuring you only pay for what you need.

Elastic Load Balancing (ELB): Distributes incoming traffic across multiple EC2 instances, improving fault tolerance and availability.

EBS (Elastic Block Store): Provides persistent block storage volumes for EC2 instances, allowing data to be stored even after an instance is terminated.

Regions and Availability Zones: EC2 instances can be deployed in various geographic regions, each with multiple availability zones for high availability and fault tolerance.

Storage

Persistent Storage

- Elastic Block Storage (EBS) Volumes / Logically attached via AWS network.

- Automatically replicated.

- Encryption is available.

Ephemeral Storage - Local storage

- Physically attached to the underlying host.

- When the instance is stopped or terminated, all the data is lost.

- Rebooting will keep the data intact.

DEMO - Deploy EC2

S3

(Simple Storage Service)

It's an IaaS service.

- Highly Available

- Durable

- Cost Effective

- Widely Accessible

- Uptime of 99.99%

-

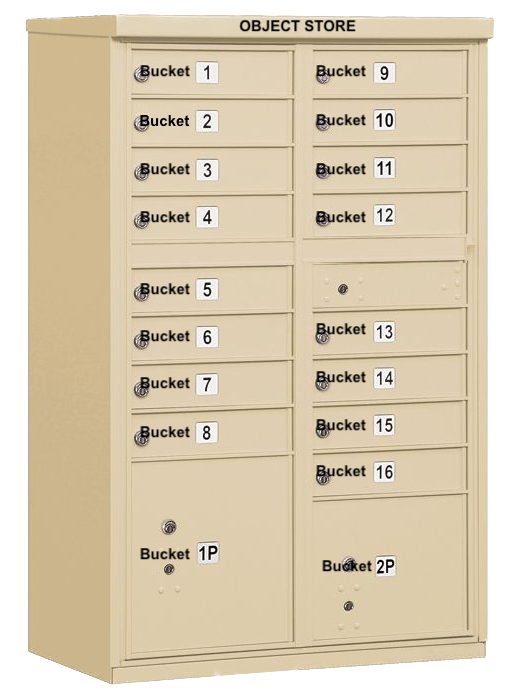

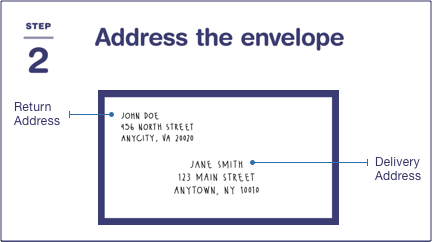

Objects and Buckets: The fundamental elements of Amazon S3 are objects and buckets. Objects are the individual data pieces stored in Amazon S3, while buckets are containers for these objects. An object consists of a file and, optionally, any metadata that describes that file.

-

It's also a regional service, meaning that when you create a bucket, you specify a region, and all objects are stored there.

-

Globally Unique: The name of an Amazon S3 bucket must be unique across all of Amazon S3, that is, across all AWS customers. It's like a domain name.

-

Globally Accessible: Even though you specify a particular region when you create a bucket, once the bucket is created, you can access it from anywhere in the world using the appropriate URL.

-

-

Scalability: Amazon S3 can scale in terms of storage, request rate, and users to support unlimited web-scale applications.

-

Security: Amazon S3 includes several robust security features, such as encryption for data at rest and in transit, access controls like Identity and Access Management (IAM) policies, bucket policies, and Access Control Lists (ACLs), and features for monitoring and logging activity, like AWS CloudTrail.

-

Data transfer: Amazon S3 supports transfer acceleration, which speeds up uploads and downloads of large objects.

-

Event Notification: S3 can notify you of specific events in your bucket. For instance, you could set up a notification to alert you when an object is deleted from your bucket.

-

Management Features: S3 has a suite of features to help manage your data, including lifecycle management, which allows you to define rules for moving or expiring objects, versioning to keep multiple versions of an object in the same bucket, and analytics for understanding and optimizing storage costs.

-

Consistency: Amazon S3 provides read-after-write consistency for PUTS of new objects and eventual consistency for overwrite PUTS and DELETES.

-

Read-after-write Consistency for PUTS of New Objects: When a new object is uploaded (PUT) into an Amazon S3 bucket, it's immediately accessible for read (GET) operations. This is known as read-after-write consistency. You can immediately retrieve a new object as soon as you create it. This applies across all regions in AWS, and it's crucial when immediate, accurate data retrieval is required.

-

Eventual Consistency for Overwrite PUTS and DELETES: Overwrite PUTS and DELETES refer to operations where an existing object is updated (an overwrite PUT) or removed (a DELETE). For these operations, Amazon S3 provides eventual consistency. If you update or delete an object and immediately attempt to read or delete it, you might still get the old version or find it there (in the case of a DELETE) for a short period. This state of affairs is temporary, and shortly after the update or deletion, you'll see the new version or find the object gone, as expected.

-

Src: Mailbox

Src: USPS

Notes

Data is stored as an "Object."

Object storage, also known as object-based storage, manages data as objects. Each object includes the data, associated metadata, and a globally unique identifier.

Unlike file storage, there are no folders or directories in object storage. Instead, objects are organized into a flat address space, called a bucket in Amazon S3's terminology.

The unique identifier allows an object to be retrieved without needing to know the physical location of the data. Metadata can be customized, making object storage incredibly flexible.

Every object gets a UID (universal ID) and associated META data.

No Folders / SubFolders

For example, if you have an object with the key images/summer/beach.png in your bucket, Amazon S3 has no internal concept of the images or summer as separate entities—it simply sees the entire string images/summer/beach.png as the key for that object.

To store objects in S3, you must first define and create a bucket.

You can think of a bucket as a container for your data.

This bucket name must be unique, not just within the region you specify, but globally against all other S3 buckets, of which there are many millions.

Any object uploaded to your buckets is given a unique object key to identify it.

- S3 bucket ownership is not transferable.

- S3 bucket names should start with alphabets, and - is allowed in between.

- An AWS account can have a maximum of 100 buckets.

More details

https://docs.aws.amazon.com/AmazonS3/latest/userguide/object-keys.html

Other types of Storage are

File Storage

Block Storage

description: Identity Access Management

IAM

src: Aws

ARN: Amazon Resource Name

Users - Individual Person / Application

Groups - Collection of IAM Users

Policies - Policy sets permission/control access to AWS resources. Policies are stored in AWS as JSON documents.

A Policy can be attached to multiple entities (users, groups, and roles) in your AWS account.

Multiple Policies can be created and attached to the user.

Roles - Set of permissions that define what actions are allowed and denied by an entity in the AWS console. Similar to a user, it can be accessed by any type of entity.

// Examples of ARNs

arn:aws:s3:::my_corporate_bucket/*

arn:aws:s3:::my_corporate_bucket/Development/*

arn:aws:iam::123456789012:user/chandr34

arn:aws:iam::123456789012:group/bigdataclass

arn:aws:iam::123456789012:group/*

Types of Policies

Identity-based policies: Identity-based policies are attached to an IAM user, group, or role (identities). These policies control what actions an identity can perform, on which resources, and under what conditions.

Resource-based policies: Resource-based policies are attached to a resource such as an Amazon S3 bucket. These policies control what actions a specified principal can perform on that resource and under what conditions.

Permission Boundary: You can use an AWS-managed policy or a customer-managed policy to set the boundary for an IAM entity (user or role). A permissions boundary is an advanced feature for using a managed policy to set the maximum permissions that an identity-based policy can grant to an IAM entity.